This is part of my series on Azure Authorization.

- Azure Authorization – The Basics

- Azure Authorization – Azure RBAC Basics

- Azure Authorization – actions and notActions

- Azure Authorization – Resource Locks and Azure Policy denyActions

- Azure Authorization – Azure RBAC Delegation

Hello again fellow geeks!

I typically avoid doing multiple posts in a month for sanity purposes, but quite possibly one of the most awesome Azure RBAC features has gone generally available under the radar. Azure RBAC Delegation is going to be one of those features that after reading this post, you will immediately go and implement. For those of you coming from AWS, this is going to be your IAM Permissions Boundary-like feature. It addresses one of the major risks to Azure’s authorization model and fills a big gap the platform has had in the authorization space.

Alright, enough hype. Let’s get to it.

Before I dive into the new feature, I encourage you to read through the prior posts in my Azure Authorization series. These posts will help you better understand how this feature fits into the bigger picture.

As I covered in my Azure RBAC Basics post, only security principals with sufficient permissions in the Microsoft.Authorization resource provider can create new Azure RBAC Role Assignments. By default, once a security principal is granted that permission, it can assign any Azure RBAC Role to itself or any other security principal within the Entra ID tenant at its immediate scope of access and all child scopes due to Azure RBAC’s inheritance model (tenant root -> management group -> subscription -> resource group -> resource). This means a human assigned a role with sufficient permissions (such as a member of an IAM support team) could accidentally or maliciously assign another privileged role to themselves or someone else and wreak havoc. That makes for a bad late night for anyone on-call.
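To make the inheritance point concrete, here is a minimal Python sketch of how an assignment at one scope reaches its child scopes. It is an illustration only, not how Azure evaluates access: the function name `scope_covers` and the sample resource names are made up, and it only handles scopes at or below a subscription, because management group membership cannot be worked out from the resource ID path alone.

```python
def scope_covers(assignment_scope: str, target_scope: str) -> bool:
    """Return True if a role assignment at assignment_scope is inherited by target_scope.

    Assumption: both scopes are ARM resource ID paths at or below a subscription.
    Management group and tenant root inheritance needs a hierarchy lookup
    and is deliberately not modeled here.
    """
    # ARM resource IDs are case-insensitive, so compare in lower case.
    parent = assignment_scope.rstrip("/").lower()
    child = target_scope.rstrip("/").lower()
    # Match whole path segments so ".../rg-app" does not cover ".../rg-app2".
    return child == parent or child.startswith(parent + "/")


# Hypothetical sample scopes for illustration.
sub = "/subscriptions/00000000-0000-0000-0000-000000000000"
rg = sub + "/resourceGroups/rg-app"
vm = rg + "/providers/Microsoft.Compute/virtualMachines/vm1"

print(scope_covers(sub, vm))       # an assignment on the subscription reaches the VM
print(scope_covers(rg, sub))       # an assignment on the resource group does not reach up
print(scope_covers(rg, rg + "2"))  # sibling resource groups are not covered
```

The takeaway is the same as in the paragraph above: a principal that can write role assignments at a subscription can, by default, hand out roles on everything underneath it.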

While the human risk exists, the greater risk is with non-human identities. When an organization moves beyond the click-ops and imperative (az cli, PowerShell, etc) stage and on to the declarative stage with IaC (infrastructure-as-code), delivery of those IaC templates (ARM, Bicep, Terraform) is put through a CI/CD (continuous integration / continuous delivery) pipeline. To deploy the code to the cloud platform, the pipeline’s compute needs an identity to authenticate to the cloud management plane. In Azure, this is accomplished through a service principal or managed identity. That identity must be granted the specific permissions it needs, which is done through Azure RBAC Role assignments.

In the ideal world, as much as can be is put through the pipeline, including role assignments. This means the pipeline needs to be able to create Azure RBAC Role assignments which means it needs permissions for the Microsoft.Authorization resource provider (or relevant built-in roles with Owner being common).

To mitigate the risk of one giant blast radius with one pipeline, organizations will often create multiple pipelines with separate pipelines for production and non-production, pipelines for platform components (networking, logging, etc) and others for the workload (possibly one for workload components such as an Event Hub and another separate pipeline for code). Pipelines will be given separate security principals with permissions at different scopes with Central IT typically owning pipelines at higher scopes (management groups) and business units owning pipelines at lower scopes (subscription or resource group).

At the end of the day you end up with lots of pipelines and lots of non-human identities that hold the Owner role at a given scope. This multiplies the risk of any one of those pipeline identities being misused to grant someone or something permissions beyond what it needs. Organizations typically mitigate this through automated and manual gates, which can get incredibly complex at scale.

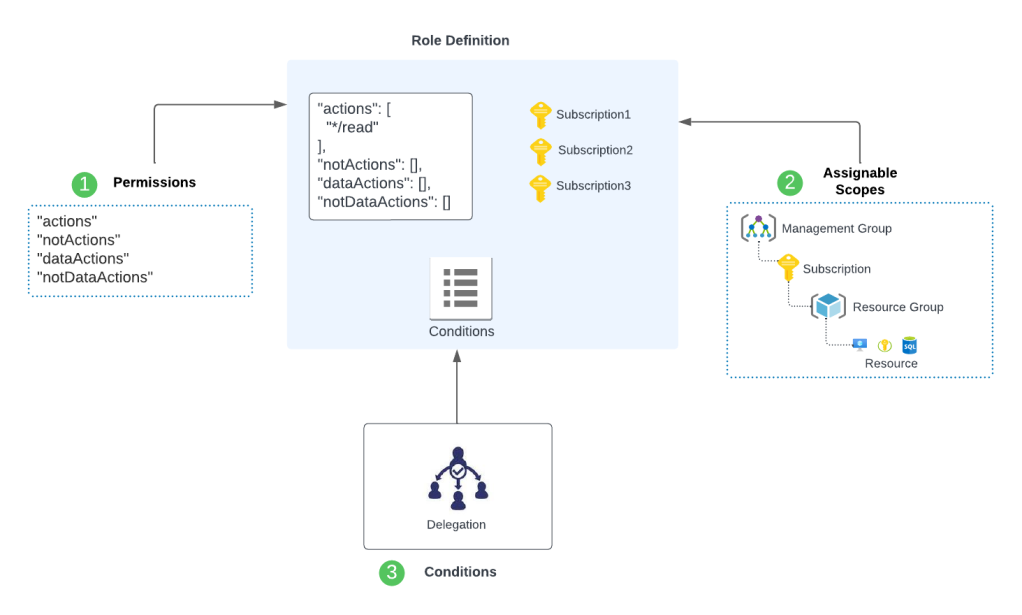

This is where Azure RBAC Delegation really shines. It allows you to wrap restrictions around how a security principal can exercise its Microsoft.Authorization permissions. These restrictions can include:

- Restricting to a specific set of Azure RBAC Roles

- Restricting to a specific security principal type (user, service principal, group)

- Restricting whether it can create new assignments, update existing assignments, or delete assignments

- Restricting it to a specific set of security principals (specific set of groups, users, etc)

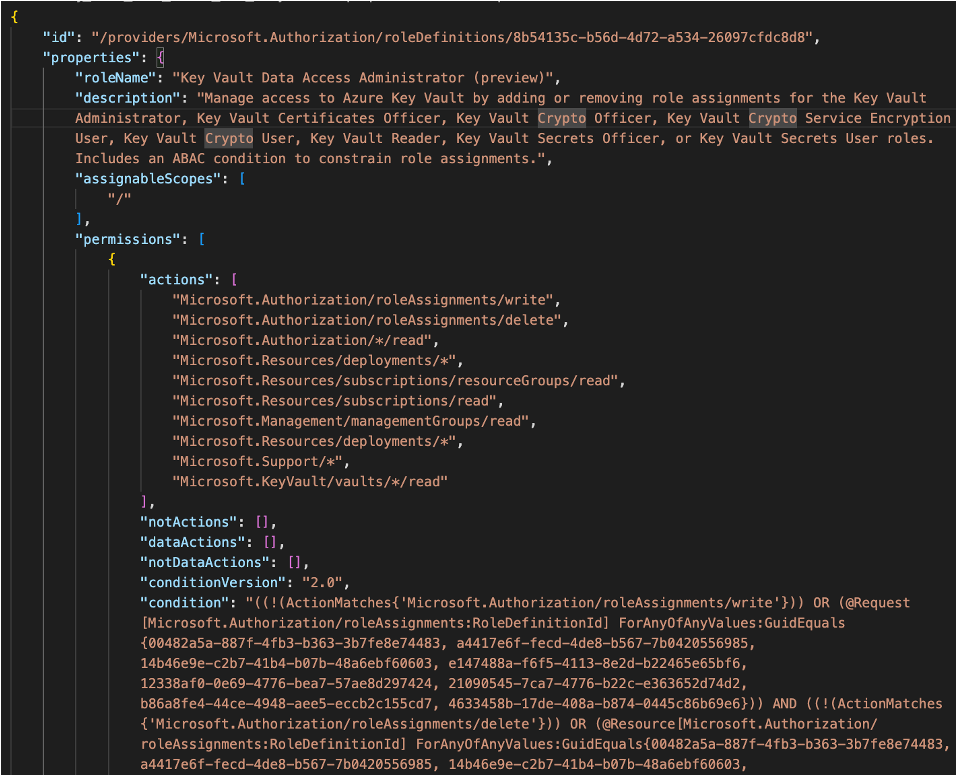

So how does it do it? Well, if you read my prior post, you’ll remember I mentioned the new property included in Azure RBAC Role assignments called conditions. RBAC Delegation uses this new property to wrap those restrictions around the role. Let’s look at an example using one of the new built-in roles Microsoft has introduced, called the Role Based Access Control Administrator.

Let’s take a look at the role definition first.

```json
{
  "id": "/subscriptions/b3b7aae7-c6c1-4b3d-bf0f-5cd4ca6b190b/providers/Microsoft.Authorization/roleDefinitions/f58310d9-a9f6-439a-9e8d-f62e7b41a168",
  "properties": {
    "roleName": "Role Based Access Control Administrator",
    "description": "Manage access to Azure resources by assigning roles using Azure RBAC. This role does not allow you to manage access using other ways, such as Azure Policy.",
    "assignableScopes": [
      "/"
    ],
    "permissions": [
      {
        "actions": [
          "Microsoft.Authorization/roleAssignments/write",
          "Microsoft.Authorization/roleAssignments/delete",
          "*/read",
          "Microsoft.Support/*"
        ],
        "notActions": [],
        "dataActions": [],
        "notDataActions": []
      }
    ]
  }
}
```

In the above role definition, you can see that, beyond read access and support requests, the role has been granted only the permissions necessary to create, update, and delete role assignments. This is more restrictive than Owner or User Access Administrator, which have a broader set of permissions in the Microsoft.Authorization resource provider. It makes for a good candidate for a business unit pipeline role versus Owner since business units shouldn’t need to be managing Azure Policy or Azure RBAC Role Definitions. That responsibility should sit within Central IT’s scope.

You do not have to use this built-in role and can certainly design your own. For example, the role above does not include the permissions to manage a resource lock, and this might be something you want the business unit to be able to manage. This feature is also supported for custom roles. In the example below, I’ve cloned the Role Based Access Control Administrator but added additional permissions to manage resource locks.

```json
{
  "id": "/subscriptions/b3b7aae7-c6c1-4b3d-bf0f-5cd4ca6b190b/providers/Microsoft.Authorization/roleDefinitions/dd681d1a-8358-4080-8a37-9ea46c90295c",
  "properties": {
    "roleName": "Privileged Test Role",
    "description": "This is a test role to demonstrate RBAC delegation",
    "assignableScopes": [
      "/subscriptions/b3b7aae7-c6c1-4b3d-bf0f-5cd4ca6b190b"
    ],
    "permissions": [
      {
        "actions": [
          "Microsoft.Authorization/roleAssignments/write",
          "Microsoft.Authorization/roleAssignments/delete",
          "*/read",
          "Microsoft.Support/*",
          "Microsoft.Authorization/locks/read",
          "Microsoft.Authorization/locks/write",
          "Microsoft.Authorization/locks/delete"
        ],
        "notActions": [],
        "dataActions": [],
        "notDataActions": []
      }
    ]
  }
}
```

If I attempt to create a new role assignment for this custom role, I’m given the ability to associate the role with a set of conditions.

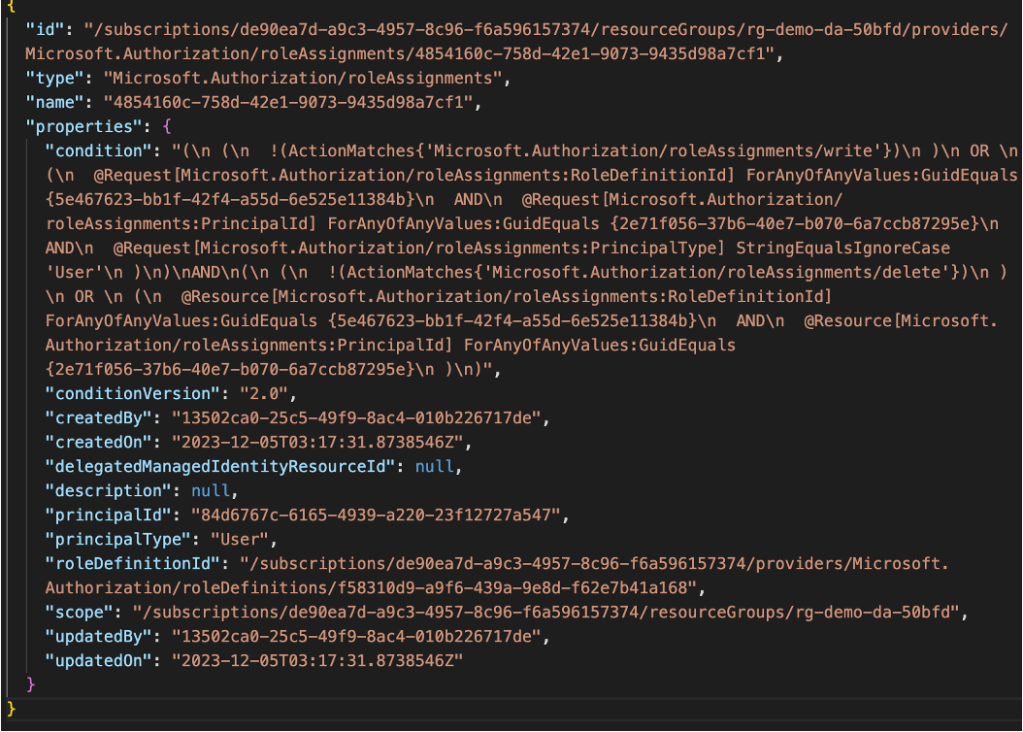
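As a quick aside on the custom role itself: if you would rather script the clone than hand-edit JSON, the step could look something like the Python sketch below. This is a rough illustration under my own assumptions. The helper `clone_role` is hypothetical and only manipulates the role definition document in memory; it does not call Azure, so you would still submit the result through your usual tooling.

```python
import copy

# Base role definition, trimmed to the fields shown earlier in this post.
base_role = {
    "properties": {
        "roleName": "Role Based Access Control Administrator",
        "assignableScopes": ["/"],
        "permissions": [
            {
                "actions": [
                    "Microsoft.Authorization/roleAssignments/write",
                    "Microsoft.Authorization/roleAssignments/delete",
                    "*/read",
                    "Microsoft.Support/*",
                ],
                "notActions": [],
                "dataActions": [],
                "notDataActions": [],
            }
        ],
    }
}

LOCK_ACTIONS = [
    "Microsoft.Authorization/locks/read",
    "Microsoft.Authorization/locks/write",
    "Microsoft.Authorization/locks/delete",
]


def clone_role(base, new_name, description, assignable_scopes, extra_actions):
    """Hypothetical helper: copy a role definition and append extra actions."""
    clone = copy.deepcopy(base)  # leave the base definition untouched
    props = clone["properties"]
    props["roleName"] = new_name
    props["description"] = description
    props["assignableScopes"] = list(assignable_scopes)
    actions = props["permissions"][0]["actions"]
    for action in extra_actions:
        if action not in actions:  # avoid duplicate entries
            actions.append(action)
    return clone


custom_role = clone_role(
    base_role,
    "Privileged Test Role",
    "This is a test role to demonstrate RBAC delegation",
    ["/subscriptions/b3b7aae7-c6c1-4b3d-bf0f-5cd4ca6b190b"],
    LOCK_ACTIONS,
)
print(custom_role["properties"]["permissions"][0]["actions"])
```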

Let’s take an example where I want a security principal to be able to create new role assignments, but only for the built-in role of Virtual Machine Contributor and only for users. The condition in my role assignment would look like the below:

```
(
 (
  !(ActionMatches{'Microsoft.Authorization/roleAssignments/write'})
 )
 OR
 (
  @Request[Microsoft.Authorization/roleAssignments:RoleDefinitionId] ForAnyOfAnyValues:GuidEquals {9980e02c-c2be-4d73-94e8-173b1dc7cf3c}
  AND
  @Request[Microsoft.Authorization/roleAssignments:PrincipalType] StringEqualsIgnoreCase 'User'
 )
)
AND
(
 (
  !(ActionMatches{'Microsoft.Authorization/roleAssignments/delete'})
 )
 OR
 (
  @Resource[Microsoft.Authorization/roleAssignments:PrincipalType] StringEqualsIgnoreCase 'User'
 )
)
```

Yes, I know the conditional language is ugly as hell. Thankfully, you won’t have to write this yourself, which I will demonstrate in a few. First, I want to walk you through the conditional language.

When using RBAC Delegation, you can associate one or more conditions with an Azure RBAC role assignment. Like most conditional logic in programming, you can combine conditions with AND and OR. Within each condition you have an action and an expression. When the condition is evaluated, the action is checked first. In the example above I have !(ActionMatches{'Microsoft.Authorization/roleAssignments/write'}), which evaluates to true for any action that isn’t a role assignment write. In that case the condition results in true and access is allowed without evaluating the expression below it. If the action is a write, this evaluates to false and the expression is then evaluated. In the example above I have two expressions joined by AND. The first expression checks whether the request I’m making to create a role assignment is for the role definition ID of the Virtual Machine Contributor. The second expression checks whether the principal type in the role assignment is a user. If either of these evaluates to false, access is denied. If both evaluate to true, evaluation moves on to the next condition, which limits the security principal to deleting role assignments assigned to users.
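If the nested parentheses are hard to follow, the walkthrough above can be restated as plain boolean logic. The Python sketch below is only my mental model of the example condition, not how Azure actually evaluates it. The function name `condition_allows` is made up, and it takes the principal type as a single argument whether it comes from the new assignment (write) or the existing one (delete).

```python
VM_CONTRIBUTOR_ID = "9980e02c-c2be-4d73-94e8-173b1dc7cf3c"  # from the condition above
OWNER_ID = "8e3af657-a8ff-443c-a75c-2fe8c4bcb635"           # built-in Owner role, used for contrast

WRITE = "Microsoft.Authorization/roleAssignments/write"
DELETE = "Microsoft.Authorization/roleAssignments/delete"


def condition_allows(action: str, role_definition_id: str, principal_type: str) -> bool:
    """Mimic the example condition for a single request (illustrative model only)."""
    is_user = principal_type.lower() == "user"  # StringEqualsIgnoreCase 'User'
    is_vm_contributor = role_definition_id.lower() == VM_CONTRIBUTOR_ID  # GuidEquals

    # First condition: the action is not a write, OR (VM Contributor AND User).
    write_ok = action != WRITE or (is_vm_contributor and is_user)
    # Second condition: the action is not a delete, OR the principal is a User.
    delete_ok = action != DELETE or is_user
    # The two conditions are joined with AND.
    return write_ok and delete_ok


print(condition_allows(WRITE, VM_CONTRIBUTOR_ID, "User"))              # allowed
print(condition_allows(WRITE, OWNER_ID, "User"))                       # denied: wrong role
print(condition_allows(WRITE, VM_CONTRIBUTOR_ID, "ServicePrincipal"))  # denied: not a user
print(condition_allows(DELETE, OWNER_ID, "User"))                      # allowed: delete only checks type
```

Note how any action other than write or delete sails through both conditions, which is why the other permissions in the role (such as managing locks) are unaffected.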

No, you do not need to learn this syntax. Microsoft has been kind enough to provide a GUI-based conditions tool which you can use to build your conditions and view as code you can include in your templates.
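Once you have the condition text, it travels with the role assignment as two extra properties, `condition` and `conditionVersion`. Here is a hedged Python sketch that assembles a role assignment document you could adapt for a template or API call. The helper `build_role_assignment` and the all-zero principal ID are placeholders of mine, the outer `name`/`scope` wrapper is just for illustration, and only the write half of the earlier condition is included to keep it short.

```python
import json
import uuid

# Values taken from this post.
SUBSCRIPTION_SCOPE = "/subscriptions/b3b7aae7-c6c1-4b3d-bf0f-5cd4ca6b190b"
RBAC_ADMIN_ROLE_GUID = "f58310d9-a9f6-439a-9e8d-f62e7b41a168"

# Hypothetical placeholder for the pipeline identity's object ID.
PIPELINE_PRINCIPAL_ID = "00000000-0000-0000-0000-000000000000"

# Write half of the example condition, as copied from the condition builder.
WRITE_CONDITION = (
    "((!(ActionMatches{'Microsoft.Authorization/roleAssignments/write'})) OR "
    "(@Request[Microsoft.Authorization/roleAssignments:RoleDefinitionId] "
    "ForAnyOfAnyValues:GuidEquals {9980e02c-c2be-4d73-94e8-173b1dc7cf3c} AND "
    "@Request[Microsoft.Authorization/roleAssignments:PrincipalType] "
    "StringEqualsIgnoreCase 'User'))"
)


def build_role_assignment(scope, role_guid, principal_id, condition):
    """Hypothetical helper: assemble a conditioned role assignment document."""
    return {
        "name": str(uuid.uuid4()),  # role assignment names are GUIDs
        "scope": scope,
        "properties": {
            "roleDefinitionId": (
                f"{scope}/providers/Microsoft.Authorization/roleDefinitions/{role_guid}"
            ),
            "principalId": principal_id,
            "principalType": "ServicePrincipal",
            "condition": condition,
            "conditionVersion": "2.0",
        },
    }


assignment = build_role_assignment(
    SUBSCRIPTION_SCOPE, RBAC_ADMIN_ROLE_GUID, PIPELINE_PRINCIPAL_ID, WRITE_CONDITION
)
print(json.dumps(assignment, indent=2))
```

Keeping the condition as a single string constant makes it easy to paste in whatever the condition builder generates without reformatting it.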

Pretty cool, right? The public documentation walks through a number of different scenarios where you can use this, so I’d encourage you to read it to spur ideas beyond the pipeline example I have given in this post. However, the real value of this feature is stricter control over how those pipeline identities can affect authorization.

So what are your takeaways from this post?

- Get this feature implemented YESTERDAY. This is minimal overhead with massive security return.

- Use the GUI-based condition builder to build your conditions and then copy the generated condition code into your templates.

- Take some time to learn the conditional syntax. It’s used in other places in Azure RBAC and will likely continue to grow in usage.

- Start off using the built-in Role Based Access Control Administrator role. If your business units need more than what is in there (such as managing resource locks) clone it and add those permissions.

Well folks, I hope you got some value out of this post. Add a to-do to get this in place in your Azure estate as soon as possible!