Update: In November 2019, AWS introduced support for integrating Azure AD with AWS SSO. The integration offers a ton more features, including out-of-the-box support for multiple AWS accounts. I highly recommend you go that route if you’re looking to integrate the two platforms. Check out my series on the new integration here.

Today I will continue the journey into the integration between Azure AD and Amazon Web Services. In my first entry I covered the reasons why you’d want to integrate Azure AD with AWS and provided a high-level overview of how the solution works. The remaining entries in this series will cover the steps involved in completing the integration, including deep dives into the inner workings of the solution.

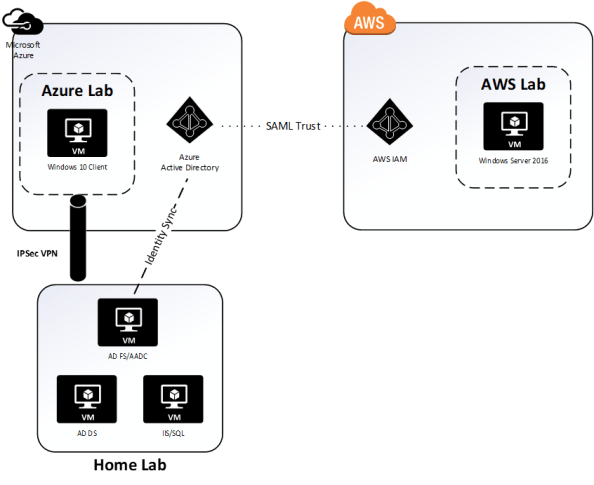

Let me start out by talking about the testing environment I’ll be using for this series.

The environment includes three virtual machines (VMs) running on Windows Server 2016 Hyper-V on a server at my house. All three VMs run Windows Server 2016: one acts as a domain controller for the journeyofthegeek.local Active Directory (AD) forest, another runs Active Directory Federation Services (AD FS) and Azure AD Connect (AADC), and the third runs Microsoft SQL Server and IIS. The IIS instance hosts a .NET sample federated application published by Microsoft.

In Microsoft Azure I have a single VNet connected to my on-premises lab through a site-to-site IPsec virtual private network (VPN) that I’ve set up with pfSense. The VNet contains a single VM running Windows 10 that is joined to the journeyofthegeek.local AD domain. The Azure AD tenant that provides the identity backend for the Microsoft Azure subscription is synchronized with the journeyofthegeek.local AD domain using Azure AD Connect and is associated with the domain journeyofthegeek.com. Authentication to the Azure AD tenant is federated through my AD FS instance. I’m not synchronizing passwords, and I’m using an alternate login ID: the user principal name synchronized to Azure AD is sourced from the AD attribute msDS-CloudExtensionAttribute1. I’m still configured to use an alternate login ID because of some testing I needed to do for a previous project.

I created a single test user in the journeyofthegeek.local AD domain named Rick Sanchez, with a user principal name (UPN) of rick.sanchez@journeyofthegeek.local and an msDS-CloudExtensionAttribute1 value of rick.sanchez@journeyofthegeek.com. The only other populated attribute worth noting is mail, which has the value rick.sanchez@journeyofthegeek.com. The user is synchronized to Azure AD through the Azure AD Connect instance.

In AWS I have a single Elastic Compute Cloud (EC2) instance running Windows Server 2016 within a Virtual Private Cloud (VPC). I’ll be configuring Azure AD as an identity provider for the AWS account and associating it with an AWS IAM role named AzureADEC2Admins. The role will grant full administrative rights over the management of the EC2 instances in the account through the AmazonEC2FullAccess permissions policy.
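For readers who want to picture that role on the AWS side, here is a minimal Python sketch that builds the role’s trust policy. It assumes the usual IAM pattern for SAML federation: the role trusts a SAML identity provider registered in IAM and allows sts:AssumeRoleWithSAML for assertions whose audience is the AWS sign-in endpoint. The account ID and the provider name AzureAD are hypothetical placeholders; AzureADEC2Admins and AmazonEC2FullAccess come from the setup above. The boto3 calls that would actually create the role are left in comments so the sketch runs without AWS credentials.

```python
import json

# Hypothetical placeholders: substitute your own account ID and the name
# you give the SAML identity provider in IAM.
ACCOUNT_ID = "111122223333"
SAML_PROVIDER_NAME = "AzureAD"
ROLE_NAME = "AzureADEC2Admins"

# AWS managed policy granting full EC2 management rights.
EC2_FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"


def build_trust_policy(account_id: str, provider_name: str) -> dict:
    """Trust policy letting users federated via the SAML provider assume the role."""
    provider_arn = f"arn:aws:iam::{account_id}:saml-provider/{provider_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithSAML",
                # Only accept assertions addressed to the AWS sign-in endpoint.
                "Condition": {
                    "StringEquals": {"SAML:aud": "https://signin.aws.amazon.com/saml"}
                },
            }
        ],
    }


if __name__ == "__main__":
    trust_policy = build_trust_policy(ACCOUNT_ID, SAML_PROVIDER_NAME)
    print(json.dumps(trust_policy, indent=2))
    # With boto3 installed and credentials configured, the role could be created with:
    #   iam = boto3.client("iam")
    #   iam.create_role(RoleName=ROLE_NAME,
    #                   AssumeRolePolicyDocument=json.dumps(trust_policy))
    #   iam.attach_role_policy(RoleName=ROLE_NAME, PolicyArn=EC2_FULL_ACCESS_ARN)
```

Keeping the trust policy (who may assume the role) separate from the permissions policy (what the role may do) mirrors how IAM itself stores them.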

Let’s begin, shall we?

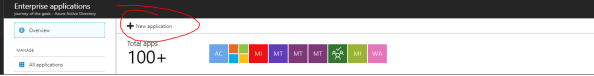

The first step is to sign in to the Azure portal with an account that is a member of the Global Administrators role and navigate to the Azure Active Directory blade. From there I select the Enterprise applications blade and click the New application link.

I then search the application gallery for AWS, select the AWS application, accept the default name, and click the Add button. Azure AD adds the application and then jumps to a quick start page. So what exactly does it mean to add an application to Azure AD? Good question. To answer it, we’ll want to use the Azure AD PowerShell cmdlets; you can reference this link.

Before we jump into running cmdlets, let’s talk very briefly about the concept of application identities in AAD. If you’ve managed Active Directory Domain Services (AD DS), you’re very familiar with the concept of service accounts. When you needed an application (let’s call it a non-human, to be more in line with industry terminology) to access AD-integrated resources directly or on behalf of a user, you would create a security principal to represent the non-human. That security principal could be a user object, a managed service account object, or a group managed service account object. You would then grant that security principal rights and permissions over the resource, or grant it the right to impersonate a user and access the resource on the user’s behalf. The part we want to focus on is the impersonation, or delegation, of access to a resource on behalf of a user. In AD DS that delegation is accomplished through the Kerberos protocol.

When we shift over to AAD, the same basic concepts still apply: we create a security principal to represent the application and grant that application direct or delegated access to a resource. The difference is that the protocol handling the access shifts from Kerberos to OAuth 2.0. One thing many people get confused about is thinking that OAuth handles authentication. It doesn’t. It has nothing to do with authentication and everything to do with authorization, or more precisely, delegation. When we add an application to AAD, a service principal object and, in some cases, an application object are created in the AAD tenant to represent the application (for AWS, both are created). I’m going to focus on the service principal object for the AWS integration, but you can read through this link for a good walkthrough of application and service principal objects and how they differ.

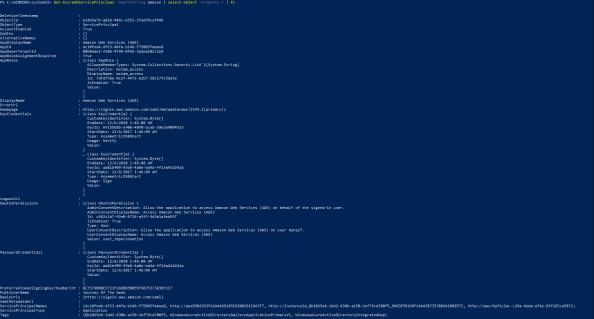

Now back to AWS. We added the application, so we now have a service principal object in our tenant representing AWS. Here are a few of the object’s attributes, pulled via PowerShell.
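I pulled these attributes with the Azure AD PowerShell module. If you’d rather query over REST, a roughly equivalent lookup could go through the Microsoft Graph servicePrincipals endpoint. The Python sketch below only builds the request URL; sending it would require an access token carrying a permission such as Application.Read.All, which is out of scope here. Note that Graph names some properties differently from the AzureAD module (for example oauth2PermissionScopes rather than Oauth2Permissions), and the display name to filter on is whatever name the gallery application was given in your tenant.

```python
from urllib.parse import quote

GRAPH_BASE = "https://graph.microsoft.com/v1.0"

# Properties discussed in this post, using their Microsoft Graph names.
SELECTED_PROPERTIES = [
    "appRoles",
    "keyCredentials",
    "oauth2PermissionScopes",
    "passwordCredentials",
    "preferredTokenSigningKeyThumbprint",
    "replyUrls",
]


def service_principal_query_url(display_name: str) -> str:
    """Build a Graph URL that looks up a service principal by display name."""
    # OData string literals escape a single quote by doubling it.
    literal = display_name.replace("'", "''")
    filter_expr = quote(f"displayName eq '{literal}'")
    select_expr = ",".join(SELECTED_PROPERTIES)
    return f"{GRAPH_BASE}/servicePrincipals?$filter={filter_expr}&$select={select_expr}"


if __name__ == "__main__":
    # "AWS" is a placeholder; use the display name shown in your tenant.
    print(service_principal_query_url("AWS"))
```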

Reviewing the object’s attributes reveals a few interesting pieces of information. We’ll get to experiment more with what they mean when we start doing Fiddler captures, but let’s talk about them briefly now. The AppRoles attribute provides a single default role of msiam_access. Later on we’ll be adding more roles that map back to our AWS IAM roles.
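To preview where AppRoles is heading, here is a minimal Python sketch of an additional app role entry shaped like the Microsoft Graph appRole type (allowedMemberTypes, description, displayName, id, isEnabled, value). It assumes the convention from Microsoft’s AWS gallery tutorial, where the role value is the IAM role ARN and the IAM SAML provider ARN joined by a comma; the account ID and the provider name AzureAD are hypothetical placeholders.

```python
import uuid


def build_aws_app_role(account_id: str, role_name: str, provider_name: str) -> dict:
    """Sketch of an appRole entry mapping to an AWS IAM role (assumed value format)."""
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    provider_arn = f"arn:aws:iam::{account_id}:saml-provider/{provider_name}"
    return {
        "allowedMemberTypes": ["User"],  # assignable to users (and groups)
        "description": role_name,
        "displayName": role_name,
        "id": str(uuid.uuid4()),  # each app role needs a unique GUID
        "isEnabled": True,
        # Assumed convention: "<role ARN>,<SAML provider ARN>".
        "value": f"{role_arn},{provider_arn}",
    }


if __name__ == "__main__":
    print(build_aws_app_role("111122223333", "AzureADEC2Admins", "AzureAD"))
```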

Next up is KeyCredentials, which contains two entries. This attribute took me a while to work out. In short, based upon the startDate, I think these two entries reference the self-signed certificate included in the IdP metadata that is created after the application is added to the directory. I’ll cover the IdP metadata in the next entry.

The Oauth2Permissions are a bit funky for this use case, since we’re not really allowing the application to access AWS on our behalf, but rather asking Azure AD to produce a SAML assertion that asserts our identity. Maybe the delegation can be thought of as granting Azure AD the right to create logical security tokens that represent our users and can be used to assert their identity to AWS.

PasswordCredentials contains a single entry that shares the same KeyId as the signing KeyCredential. As best I can figure from reading the documentation, this attribute would normally contain entries for client keys when certificate authentication isn’t used. Given that it contains a single entry with the same KeyId as the KeyCredential for signing, I can only guess it will contain an entry even when a certificate is used to authenticate the application.
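The cross-check I did by eye on KeyCredentials and PasswordCredentials can be sketched in Python. This minimal sketch assumes the object has been retrieved as Graph-style JSON (camelCase keyId and usage); the example data is hand-written and hypothetical, not output captured from my tenant. For what it’s worth, Microsoft Graph’s documentation for the servicePrincipal addTokenSigningCertificate action describes a self-signed token-signing certificate as a private-key entry with usage Sign, a public-key entry with usage Verify, and a password credential, which would appear to line up with the two KeyCredentials entries and the single PasswordCredentials entry seen here.

```python
from collections import defaultdict


def key_ids_by_usage(service_principal: dict) -> dict:
    """Group keyCredentials keyIds by usage (for example Sign or Verify)."""
    grouped = defaultdict(list)
    for cred in service_principal.get("keyCredentials", []):
        grouped[cred.get("usage", "unknown")].append(cred.get("keyId"))
    return dict(grouped)


def shared_key_ids(service_principal: dict) -> set:
    """Return keyIds present in both keyCredentials and passwordCredentials."""
    key_ids = {c.get("keyId") for c in service_principal.get("keyCredentials", [])}
    password_ids = {c.get("keyId") for c in service_principal.get("passwordCredentials", [])}
    return (key_ids & password_ids) - {None}


if __name__ == "__main__":
    # Hypothetical stand-in for the real object; not captured output.
    example_sp = {
        "keyCredentials": [
            {"keyId": "key-a", "usage": "Sign"},
            {"keyId": "key-b", "usage": "Verify"},
        ],
        "passwordCredentials": [{"keyId": "key-a"}],
    }
    print(key_ids_by_usage(example_sp))  # {'Sign': ['key-a'], 'Verify': ['key-b']}
    print(shared_key_ids(example_sp))    # {'key-a'}
```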

The last attributes of interest are PreferredTokenSigningKeyThumbprint, which references the certificate within the IdP metadata, and ReplyUrls, which holds the assertion consumer service URL for AWS.
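One way to confirm that PreferredTokenSigningKeyThumbprint points at the certificate in the IdP metadata is to decode the base64 certificate value from the metadata and hash it. The Python sketch below assumes the property holds the certificate’s SHA-1 thumbprint as hex (which is how Windows tooling reports thumbprints) and that the object is available as Graph-style JSON with a replyUrls list. AWS_SAML_ACS is the AWS sign-in SAML endpoint.

```python
import base64
import hashlib

# AWS sign-in endpoint that consumes SAML assertions.
AWS_SAML_ACS = "https://signin.aws.amazon.com/saml"


def thumbprint_from_metadata(b64_certificate: str) -> str:
    """SHA-1 thumbprint (uppercase hex) of a base64 DER certificate from IdP metadata."""
    # Metadata often wraps the base64 text across lines; drop all whitespace first.
    der_bytes = base64.b64decode("".join(b64_certificate.split()))
    return hashlib.sha1(der_bytes).hexdigest().upper()


def service_principal_matches(service_principal: dict, b64_certificate: str) -> bool:
    """Check the signing thumbprint and the AWS reply URL on a service principal."""
    expected = thumbprint_from_metadata(b64_certificate)
    actual = (service_principal.get("preferredTokenSigningKeyThumbprint") or "").upper()
    return actual == expected and AWS_SAML_ACS in service_principal.get("replyUrls", [])
```

Comparing in uppercase avoids false mismatches, since different tools display thumbprints in upper or lower case.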

So yeah, that’s what happens in the two or three seconds it takes to register the AWS application with Azure AD. I found it interesting how the service principal object is used to represent the trust between Azure AD and AWS, and how much configuration information is attached to the object simply by adding the application. It’s nice to have some of the configuration work done for us out of the box, but there is much more to do.

In the next entry I’ll walk through the Quick Start for the AWS application configuration and explore the metadata Azure AD creates.

The journey continues in my third entry.