This is part of my series on GenAI Services in Azure:

- Azure OpenAI Service – Infra and Security Stuff

- Azure OpenAI Service – Authentication

- Azure OpenAI Service – Authorization

- Azure OpenAI Service – Logging

- Azure OpenAI Service – Azure API Management and Entra ID

- Azure OpenAI Service – Granular Chargebacks

- Azure OpenAI Service – Load Balancing

- Azure OpenAI Service – Blocking API Key Access

- Azure OpenAI Service – Securing Azure OpenAI Studio

- Azure OpenAI Service – Challenge of Logging Streaming ChatCompletions

- Azure OpenAI Service – How To Get Insights By Collecting Logging Data

- Azure OpenAI Service – How To Handle Rate Limiting

- Azure OpenAI Service – Tracking Token Usage with APIM

- Azure AI Studio – Chat Playground and APIM

- Azure OpenAI Service – Streaming ChatCompletions and Token Consumption Tracking

- Azure OpenAI Service – Load Testing

Updates:

- 10/29/2024 – Microsoft has announced a deployment option referred to as a data zone (https://azure.microsoft.com/en-us/blog/accelerate-scale-with-azure-openai-service-provisioned-offering/). Data zones can be thought of as data sovereignty boundaries incorporated into the existing global deployment option. This significantly eases load balancing: instead of deploying individual regional instances, you can deploy a single instance with a data zone deployment within a single subscription. As you hit the cap for TPM/RPM (tokens per minute/requests per minute) within that subscription, you can repeat the process with a new subscription and load balance across the two. This will result in fewer backends and a simpler load balancing setup.

Welcome back folks!

Today I’m back again talking load balancing in AOAI (Azure OpenAI Service). This is an area which has seen a ton of innovation over the past year. What began as a very basic APIM (API Management) policy snippet providing randomized load balancing was matured with more intelligence by a great crew out of Microsoft via the “Smart” Load Balancing Policy. Innovative Microsoft folk threw together a solution called PowerProxy which provides load balancing and other functionality without the need for APIM. Simon Kurtz even put together a new Python library to provide load balancing at the SDK level without the need for additional infrastructure. Lots of great ideas put into action.

The Product Group for APIM over at Microsoft was obviously paying attention to the focus in this area and has introduced native functionality which makes addressing this need a piece of cake. With the introduction of the load balancer and circuit breaker feature in APIM, you can now perform complex load balancing without needing a complex APIM policy. This dropped with a bunch of other Generative AI Gateway (told you this would become an industry term!) features for APIM that were announced this week. These other features include throttling based on tokens consumed (highly sought after feature!), emitting token counts to App Insights, caching completions for optimization of token usage, and a simpler way to onboard AOAI into APIM. Very cool stuff which I’ll be covering over the next few weeks. For this post I’m going to focus on the new load balancing and circuit breaker feature.

Before I dive into the new feature I want to do a quick review of why scaling across AOAI instances is so important. For each model you have a limited number of requests and tokens you can pass to the service within a given subscription within a region. These limits vary on a per model basis. If you’re passing large prompts or making a lot of requests it’s fairly easy to hit these limits. I’ve seen a customer hit the limits within a region with one document processing application. I had another customer who deployed a single Chat Bot in a simple RAG (retrieval augmented generation) pattern that was being used by a large swath of their help desk staff, and limits were quickly a problem. The point I’m making here is you will hit these limits and you will need to figure out how to solve it. Solving it is going to require additional instances in different Azure regions, likely spread across multiple subscriptions. This means you’ll need to figure out a way to spread applications across these instances to mitigate the amount of throttling your applications have to deal with.

As I covered earlier, there are a lot of ways you can load balance this service. You could do it at the local application using Simon’s Python library if you need to get something up and running quickly for an application or two. If you have an existing deployed API Gateway like an Apigee or Mulesoft, you could do it there if you can get the logic right to support it. If you want to custom build something from scratch or customize a community offering like PowerProxy you could do that as well if you’re comfortable owning support for the solution. Finally, you have the native option of using Azure APIM. I’m a fan of the APIM option over the Python library because it’s scalable to support hundreds of applications with a GenAI (generative AI) need. I also like it more than custom building something because the reality is most customers don’t have the people with the necessary skill sets to build something and are even less likely to have the bodies to support yet another custom tool. Another benefit of using APIM is that the backend infrastructure powering the solution (load balancers, virtual machines, and the like) is Microsoft’s responsibility to run and maintain. Beyond load balancing, it’s clear that Microsoft is investing in other “Generative AI Gateway” types of functionality that make it a strategic choice to move forward with. These other features are very important from a security and operations perspective as I’ve covered in past posts. No, there was not someone from Microsoft holding me hostage forcing me to recommend APIM. It is a good solution for this use case for most customers today.

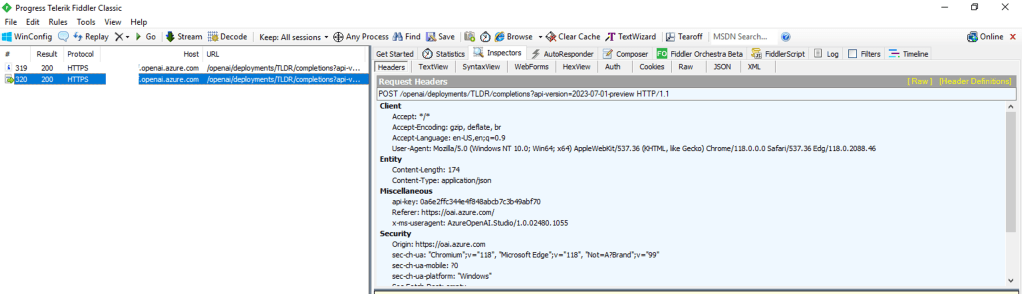

Ok, back to the new load balancing and circuit breaker feature. This feature lets you use native APIM functionality to define load balancing and circuit breaker behavior around your APIM backends. Historically, to get this feature set you’d need a complex policy like the “smart” load balancing policy seen below.

<policies>

<inbound>

<base />

<!-- Getting the main variable where we keep the list of backends -->

<cache-lookup-value key="listBackends" variable-name="listBackends" />

<!-- If we can't find the variable, initialize it -->

<choose>

<when condition="@(context.Variables.ContainsKey("listBackends") == false)">

<set-variable name="listBackends" value="@{

// -------------------------------------------------

// ------- Explanation of backend properties -------

// -------------------------------------------------

// "url": Your backend url

// "priority": Lower value means higher priority over other backends.

// If you have one or more Priority 1 backends, they will always be used instead

// of Priority 2 or higher. Higher-value backends will only be used if your lower-value (top priority) backends are all throttling.

// "isThrottling": Indicates if this endpoint is returning 429 (Too many requests) currently

// "retryAfter": We use it to know when to mark this endpoint as healthy again after we received a 429 response

JArray backends = new JArray();

backends.Add(new JObject()

{

{ "url", "https://andre-openai-eastus.openai.azure.com/" },

{ "priority", 1},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-eastus-2.openai.azure.com/" },

{ "priority", 1},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-northcentralus.openai.azure.com/" },

{ "priority", 1},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-canadaeast.openai.azure.com/" },

{ "priority", 2},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-francecentral.openai.azure.com/" },

{ "priority", 3},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-uksouth.openai.azure.com/" },

{ "priority", 3},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-westeurope.openai.azure.com/" },

{ "priority", 3},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

backends.Add(new JObject()

{

{ "url", "https://andre-openai-australia.openai.azure.com/" },

{ "priority", 4},

{ "isThrottling", false },

{ "retryAfter", DateTime.MinValue }

});

return backends;

}" />

<!-- And store the variable into cache again -->

<cache-store-value key="listBackends" value="@((JArray)context.Variables["listBackends"])" duration="60" />

</when>

</choose>

<authentication-managed-identity resource="https://cognitiveservices.azure.com" output-token-variable-name="msi-access-token" ignore-error="false" />

<set-header name="Authorization" exists-action="override">

<value>@("Bearer " + (string)context.Variables["msi-access-token"])</value>

</set-header>

<set-variable name="backendIndex" value="-1" />

<set-variable name="remainingBackends" value="1" />

</inbound>

<backend>

<retry condition="@(context.Response != null && (context.Response.StatusCode == 429 || context.Response.StatusCode >= 500) && ((Int32)context.Variables["remainingBackends"]) > 0)" count="50" interval="0">

<!-- Before picking the backend, let's verify if there is any that should be set to not throttling anymore -->

<set-variable name="listBackends" value="@{

JArray backends = (JArray)context.Variables["listBackends"];

for (int i = 0; i < backends.Count; i++)

{

JObject backend = (JObject)backends[i];

if (backend.Value<bool>("isThrottling") && DateTime.Now >= backend.Value<DateTime>("retryAfter"))

{

backend["isThrottling"] = false;

backend["retryAfter"] = DateTime.MinValue;

}

}

return backends;

}" />

<cache-store-value key="listBackends" value="@((JArray)context.Variables["listBackends"])" duration="60" />

<!-- This is the main logic to pick the backend to be used -->

<set-variable name="backendIndex" value="@{

JArray backends = (JArray)context.Variables["listBackends"];

int selectedPriority = Int32.MaxValue;

List<int> availableBackends = new List<int>();

for (int i = 0; i < backends.Count; i++)

{

JObject backend = (JObject)backends[i];

if (!backend.Value<bool>("isThrottling"))

{

int backendPriority = backend.Value<int>("priority");

if (backendPriority < selectedPriority)

{

selectedPriority = backendPriority;

availableBackends.Clear();

availableBackends.Add(i);

}

else if (backendPriority == selectedPriority)

{

availableBackends.Add(i);

}

}

}

if (availableBackends.Count == 1)

{

return availableBackends[0];

}

if (availableBackends.Count > 0)

{

//Returns a random backend from the list if we have more than one available with the same priority

return availableBackends[new Random().Next(0, availableBackends.Count)];

}

else

{

//If there are no available backends, the request will be sent to the first one

return 0;

}

}" />

<set-variable name="backendUrl" value="@(((JObject)((JArray)context.Variables["listBackends"])[(Int32)context.Variables["backendIndex"]]).Value<string>("url") + "/openai")" />

<set-backend-service base-url="@((string)context.Variables["backendUrl"])" />

<forward-request buffer-request-body="true" />

<choose>

<!-- In case we got 429 or 5xx from a backend, update the list with its status -->

<when condition="@(context.Response != null && (context.Response.StatusCode == 429 || context.Response.StatusCode >= 500) )">

<cache-lookup-value key="listBackends" variable-name="listBackends" />

<set-variable name="listBackends" value="@{

JArray backends = (JArray)context.Variables["listBackends"];

int currentBackendIndex = context.Variables.GetValueOrDefault<int>("backendIndex");

int retryAfter = Convert.ToInt32(context.Response.Headers.GetValueOrDefault("Retry-After", "-1"));

if (retryAfter == -1)

{

retryAfter = Convert.ToInt32(context.Response.Headers.GetValueOrDefault("x-ratelimit-reset-requests", "-1"));

}

if (retryAfter == -1)

{

retryAfter = Convert.ToInt32(context.Response.Headers.GetValueOrDefault("x-ratelimit-reset-tokens", "10"));

}

JObject backend = (JObject)backends[currentBackendIndex];

backend["isThrottling"] = true;

backend["retryAfter"] = DateTime.Now.AddSeconds(retryAfter);

return backends;

}" />

<cache-store-value key="listBackends" value="@((JArray)context.Variables["listBackends"])" duration="60" />

<set-variable name="remainingBackends" value="@{

JArray backends = (JArray)context.Variables["listBackends"];

int remainingBackends = 0;

for (int i = 0; i < backends.Count; i++)

{

JObject backend = (JObject)backends[i];

if (!backend.Value<bool>("isThrottling"))

{

remainingBackends++;

}

}

return remainingBackends;

}" />

</when>

</choose>

</retry>

</backend>

<outbound>

<base />

<!-- This will return the used backend URL in the HTTP header response. Remove it if you don't want to expose this data -->

<set-header name="x-openai-backendurl" exists-action="override">

<value>@(context.Variables.GetValueOrDefault<string>("backendUrl", "none"))</value>

</set-header>

</outbound>

<on-error>

<base />

</on-error>

</policies>

Complex policies like the above are difficult to maintain and easy to break (I know, I break my policies all the time). Compare that with a policy that does something very similar with the new load balancing and circuit breaker feature.

<policies>

<!-- Throttle, authorize, validate, cache, or transform the requests -->

<inbound>

<set-backend-service backend-id="backend_pool_aoai" />

<base />

</inbound>

<!-- Control if and how the requests are forwarded to services -->

<backend>

<base />

</backend>

<!-- Customize the responses -->

<outbound>

<base />

</outbound>

<!-- Handle exceptions and customize error responses -->

<on-error>

<base />

</on-error>

</policies>

A bit simpler eh? With the new feature you establish a new APIM backend of a “pool” type. In this backend you configure your load balancing and circuit breaker logic. In the Terraform template below, I’ve created a load balanced pool that includes three existing APIM backends which are each an individual AOAI instance. I’ve divided the three backends into two priority groups so that APIM will concentrate the requests on the first priority group until a circuit breaker rule is triggered. I configured a circuit breaker rule that will hold sending additional requests to a backend for 1 minute (tripDuration) if that backend returns a single (count) 429 over the course of 1 minute (interval). You’ll likely want to play with the tripDuration and interval to figure out what works for you.

Priority group 2 will only be used if all the backends in priority group 1 have circuit breaker rules tripped. The use case here might be that your priority group 1 instance is an AOAI instance set up for PTU (provisioned throughput units) and you want overflow to dump down into instances deployed at the standard tier (basically consumption based).
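
To make the behavior concrete, here is a minimal Python sketch of the priority group and circuit breaker logic described above. It is a toy model and not how APIM implements the feature internally. The `Backend` and `Pool` classes, the random choice within a priority group, and returning `None` when every circuit is tripped are all assumptions made for illustration. The defaults mirror the rule in this post: a single 429 (count) within 1 minute (interval) trips the circuit for 1 minute (tripDuration).

```python
import random
from dataclasses import dataclass, field


@dataclass
class Backend:
    name: str
    priority: int                      # lower value = higher priority
    tripped_until: float = 0.0         # time (seconds) when the circuit closes again
    failures: list = field(default_factory=list)  # timestamps of recent 429s

    def available(self, now: float) -> bool:
        return now >= self.tripped_until


class Pool:
    """Hypothetical model of a load-balanced pool with one circuit breaker rule."""

    def __init__(self, backends, count=1, interval=60.0, trip_duration=60.0):
        self.backends = backends
        self.count = count                  # 429s needed to trip the circuit
        self.interval = interval            # window (seconds) in which they are counted
        self.trip_duration = trip_duration  # how long (seconds) the backend is skipped

    def pick(self, now, rng=random):
        """Return a backend from the best priority group that is not tripped."""
        candidates = [b for b in self.backends if b.available(now)]
        if not candidates:
            return None  # assumption: caller decides what to do when all are tripped
        best = min(b.priority for b in candidates)
        return rng.choice([b for b in candidates if b.priority == best])

    def record_429(self, backend, now, retry_after=None):
        """Count a 429 and trip the circuit once the threshold is reached."""
        backend.failures = [t for t in backend.failures if now - t < self.interval]
        backend.failures.append(now)
        if len(backend.failures) >= self.count:
            # Assumption: a Retry-After value, when supplied, replaces trip_duration.
            hold = retry_after if retry_after is not None else self.trip_duration
            backend.tripped_until = now + hold
            backend.failures.clear()


# Usage: same layout as the pool in this post (one priority 1, two priority 2).
pool = Pool([Backend("openai-3", 1), Backend("openai-1", 2), Backend("openai-2", 2)])
first = pool.pick(now=0.0)        # the only priority 1 backend
pool.record_429(first, now=0.0)   # one 429 trips it for 60 seconds
overflow = pool.pick(now=1.0)     # one of the two priority 2 backends
recovered = pool.pick(now=61.0)   # priority 1 is back after the trip duration
```

Modeling time as a plain number passed in by the caller keeps the sketch deterministic, which makes it easy to experiment with different interval and tripDuration values before touching a real pool.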

resource "azapi_resource" "symbolicname" {

type = "Microsoft.ApiManagement/service/backends@2023-05-01-preview"

name = "string"

parent_id = "string"

body = jsonencode({

properties = {

circuitBreaker = {

rules = [

{

failureCondition = {

count = 1

errorReasons = [

"Backend service is throttling"

]

interval = "PT1M"

statusCodeRanges = [

{

max = 429

min = 429

}

]

}

name = "breakThrottling"

tripDuration = "PT1M"

acceptRetryAfter = true

}

]

}

description = "This is the load balanced backend"

pool = {

services = [

{

id = "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX/resourceGroups/rg-demo-aoai/providers/Microsoft.ApiManagement/service/apim-demo-aoai-jog/backends/openai-3",

priority = 1

},

{

id = "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX/resourceGroups/rg-demo-aoai/providers/Microsoft.ApiManagement/service/apim-demo-aoai-jog/backends/openai-1",

priority = 2

},

{

id = "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX/resourceGroups/rg-demo-aoai/providers/Microsoft.ApiManagement/service/apim-demo-aoai-jog/backends/openai-2",

priority = 2

}

]

}

}

})

}

Very cool right? This makes for a way simpler APIM policy, which makes troubleshooting APIM policy that much easier. You could also establish different pools for different categories of applications. Maybe you have a pool with a PTU and standard tier instances for mission-critical production apps and another pool of only standard instances for non-production applications. You could then direct specific applications (based on their Entra ID service principal id) to different pools. This feature gives you a ton of flexibility in how you handle load balancing without a ton of APIM policy overhead.
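
The per-application routing idea boils down to a lookup from the caller's identity to a pool name. Here is a rough Python sketch of that decision only. The application ids, the pool names other than `backend_pool_aoai`, and the `select_pool` helper are all hypothetical. In APIM the result would feed the `backend-id` of `set-backend-service`, with the claims coming from the validated Entra ID token. The sketch checks both `appid` (v1.0 access tokens) and `azp` (v2.0 access tokens), since which claim carries the calling application's id depends on the token version.

```python
# Hypothetical mapping of Entra ID application (client) ids to APIM backend pools.
POOL_BY_APP_ID = {
    "00000000-0000-0000-0000-000000000001": "backend_pool_aoai",  # mission-critical prod app
    "00000000-0000-0000-0000-000000000002": "backend_pool_aoai",  # another prod app
}

# Hypothetical pool of standard tier instances for everything else.
DEFAULT_POOL = "backend_pool_aoai_nonprod"


def select_pool(claims: dict) -> str:
    """Pick a backend pool name from the claims of an already validated token."""
    app_id = claims.get("appid") or claims.get("azp")
    return POOL_BY_APP_ID.get(app_id, DEFAULT_POOL)


# Usage: a known production app lands on the PTU-backed pool, anything else overflows
# to the non-production pool.
prod_pool = select_pool({"appid": "00000000-0000-0000-0000-000000000001"})
other_pool = select_pool({"azp": "99999999-9999-9999-9999-999999999999"})
```

Defaulting unknown callers to the non-production pool is a design choice for this sketch. You may prefer to reject them outright instead.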

With the introduction of this feature, APIM becomes that much more appealing a solution for this use case. No longer do you need a complex policy and in-depth APIM policy troubleshooting skills to make this work. Tack on the additional GenAI features Microsoft introduced that I mentioned earlier, as well as the existing features and capabilities available in APIM policy, and you have a damn fine tool for your Generative AI Gateway use case.

Well folks that wraps up this post. I hope this overview gave you some insight into why load balancing is important with AOAI, what the historical challenges have been doing it within APIM, and how those challenges have been largely removed. Add the new GenAI-focused features as a bonus and this is a tool worth checking out.