Welcome back to part 2 of my series on Microsoft’s managed services offering of Azure Active Directory Domain Services (AAD DS). In my first post I covered some of the basic configuration settings of a default service instance. In this post I’m going to dig a bit deeper and look at network flows, what types of secure tunnels are available for LDAPS, and which authentication protocols and cipher suites are configured for the service.

To perform these tests I leveraged a few different tools. For a port scanner I used Zenmap. To examine the protocols and cipher suites supported by the LDAPS service I used a custom OpenSSL binary running on an Ubuntu VM in Azure. To examine authentication protocol support I used Samba’s smbclient running on the Ubuntu VM in combination with WinSCP for file transfer, tcpdump for packet capture, and Wireshark for packet analysis.

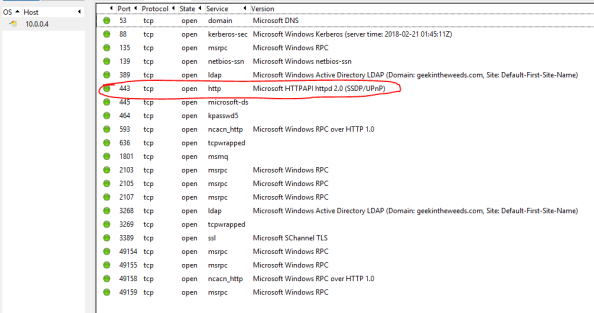

Let’s start off with examining the open ports since it takes the least amount of effort. To do that I start up Zenmap, set the target to the IP address of one of the domain controllers (DCs), choose the intense profile (why not?), and hit scan. Once the scan is complete the results are displayed.

Navigating to the Ports / Hosts tab displays the open ports. All but one of them are the standard ports you’d expect to see open on a Windows Server functioning as an Active Directory DC. The exception, port 443, deserves more investigation.
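For anyone without Zenmap handy, a plain TCP connect check gives a rough approximation of this step. The sketch below is only that: the port list is my assumption of the well-known DC ports plus 443, not the exact Zenmap output, and the function name is made up for illustration.

```python
import socket

# Well-known ports for an Active Directory DC, plus 443 (the odd one out).
# This list is an illustrative assumption, not the scan results themselves.
DC_PORTS = {
    53: "DNS",
    88: "Kerberos",
    135: "RPC endpoint mapper",
    389: "LDAP",
    443: "HTTPS (unexpected on a DC)",
    445: "SMB",
    464: "Kerberos password change",
    636: "LDAPS",
    3268: "Global catalog",
    3269: "Global catalog over TLS",
}


def open_tcp_ports(host, ports, timeout=1.0):
    """Return the ports from `ports` that accept a TCP connection on `host`."""
    found = []
    for port in sorted(ports):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success rather than raising an exception
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


# Example call (10.0.0.4 is a placeholder for a managed DC's IP address):
#   for port in open_tcp_ports("10.0.0.4", DC_PORTS):
#       print(port, DC_PORTS[port])
```

A connect check like this only says whether a port accepts connections. Unlike the intense Zenmap profile, it does no service or version detection.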

Let’s start with the obvious and attempt to hit the IP over an HTTPS connection. No luck there.

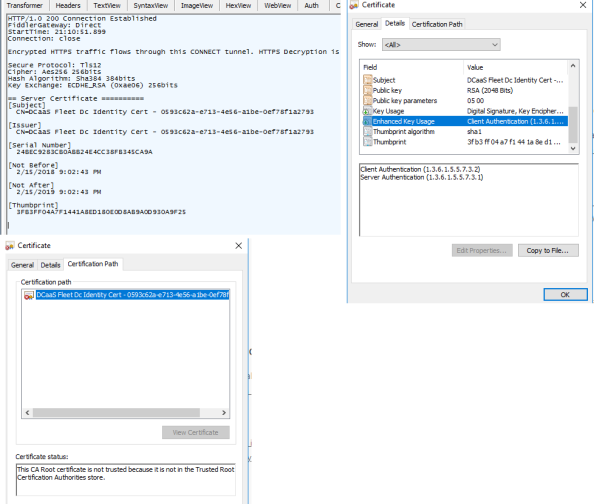

Let’s break out Fiddler and hit it again. If we look at the first session, where we build the secure tunnel to the website, we see some of the details for the certificate being used to secure the session. Opening the TextView tab of the response shows a Subject of CN=DCaaS Fleet Dc Identity Cert - 0593c62a-e713-4e56-a1be-0ef78f1a2793. Domain Controller-as-a-Service, I like it Microsoft. Additionally, Fiddler identifies the web platform as the Microsoft HTTP Server API (HTTP.SYS). Microsoft has been doing a lot more with that API since it’s much more lightweight than IIS. I wanted to take a closer look at the certificate so I opened the website in Dev mode in Chrome and exported it. The EKUs are normal for a standard use certificate, and it’s self-signed and untrusted on my system. The fact that the certificate is untrusted and Microsoft isn’t rolling it out to domain-joined members tells me whatever service is running on the port isn’t for my consumption.

So what’s running on that port? I have no idea. The use of the HTTP Server API and a self-signed certificate with a subject specific to the managed domain service tells me it’s providing access to some type of internal management service Microsoft is using to orchestrate the managed domain controllers. If anyone has more info on this, I’d love to hear it.

Let’s now take a look at how Microsoft did at securing LDAPS connectivity to the managed domain. LDAPS is not enabled by default in the managed domain and needs to be configured through the Azure AD Domain Services blade per these instructions. Oddly enough Microsoft provides an option to expose LDAPS over the Internet. Why any sane human being would ever do this, I don’t know but we’ll cover that in a later post.

I wanted to test SSLv3 and up and I didn’t want to spend time manipulating registry entries on a Windows client so I decided to spin up an Ubuntu Server 17.10 VM in Azure. While the Ubuntu VM was spinning up, I created a certificate to be used for LDAPS using the PowerShell command referenced in the Microsoft article and enabled LDAPS through the Azure AD Domain Services resource in the Azure Portal. I did not enable LDAPS for the Internet for these initial tests.

After adding the certificate used by LDAPS to the trusted certificate store on the Windows Server, I opened LDP.EXE, tried establishing an LDAPS connection over port 636, and got a successful connection.

Once I verified the managed domain was now supporting LDAPS connections I switched over to the Ubuntu box via an SSH session. Ubuntu removed SSLv3 support in the OpenSSL binary that comes pre-packaged with Ubuntu, so to test it I needed to build another OpenSSL binary. Thankfully some kind soul out there on the Interwebz documented how to do exactly that without overwriting the existing version. Before I could build a new binary I had to re-install the Make package and add the GNU Compiler Collection (GCC) package using the two commands below.

- sudo apt-get install --reinstall make

- sudo apt-get install gcc

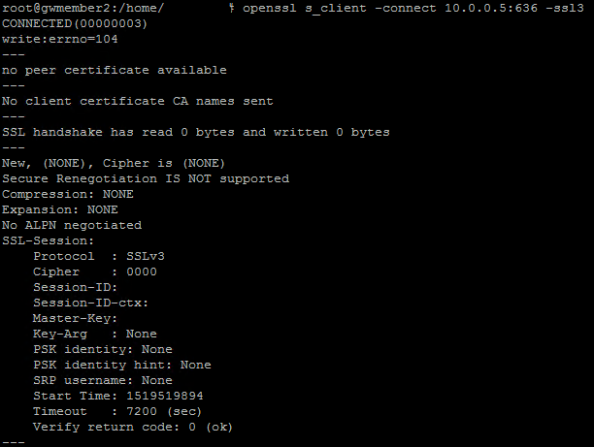

After the two packages were installed I built the new binary using the instructions in the link, tested the command, and validated the binary now includes SSLv3.

After POODLE hit the news back in 2014, Microsoft along with the rest of the tech industry advised that SSLv3 be disabled. Thankfully this basic, well-known vulnerability has been covered and SSLv3 is disabled.

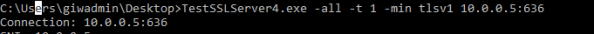
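The same kind of one-version-at-a-time probe can be sketched with Python’s standard ssl module for the TLS versions (SSLv3 is not an option here, since current Python/OpenSSL builds generally don’t ship it). The function names and the address in the example call are my own placeholders. One caveat, and it’s the same issue I hit with the stock OpenSSL binary: if the local OpenSSL build refuses an old protocol version, a failed handshake says nothing about the server.

```python
import socket
import ssl


def pinned_context(version):
    """Build a client context that offers exactly one TLS version."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # The LDAPS certificate in this lab is self-signed, so skip verification.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    # Pin both bounds so only `version` is offered in the ClientHello.
    ctx.minimum_version = version
    ctx.maximum_version = version
    return ctx


def negotiated_version(host, port, version, timeout=5.0):
    """Return the negotiated protocol name, or None if the handshake fails."""
    ctx = pinned_context(version)
    try:
        with socket.create_connection((host, port), timeout=timeout) as raw:
            with ctx.wrap_socket(raw) as tls:
                return tls.version()
    except OSError:  # ssl.SSLError is a subclass of OSError
        return None


# Example call (10.0.0.4 is a placeholder for a managed DC's IP address):
#   negotiated_version("10.0.0.4", 636, ssl.TLSVersion.TLSv1_2)
```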

SSLv3 is disabled, but what about TLS 1.0, 1.1, and 1.2? How about the cipher suites? Are they aligned with NIST guidance? To test that I used a tool named TestSSLServer by Thomas Pornin. It’s a simple command line tool which makes cycling through the available cipher suites quick and easy.

The options I chose perform the following actions:

- -all -> Perform an “exhaustive” search across cipher suites

- -t 1 -> Space out the connections by one second

- -min tlsv1 -> Start with TLSv1

The command produces the output below.

TLSv1.0:

server selection: enforce server preferences

3f- (key: RSA) ECDHE_RSA_WITH_AES_256_CBC_SHA

3f- (key: RSA) ECDHE_RSA_WITH_AES_128_CBC_SHA

3f- (key: RSA) DHE_RSA_WITH_AES_256_CBC_SHA

3f- (key: RSA) DHE_RSA_WITH_AES_128_CBC_SHA

3-- (key: RSA) RSA_WITH_AES_256_CBC_SHA

3-- (key: RSA) RSA_WITH_AES_128_CBC_SHA

3-- (key: RSA) RSA_WITH_3DES_EDE_CBC_SHA

3-- (key: RSA) RSA_WITH_RC4_128_SHA

3-- (key: RSA) RSA_WITH_RC4_128_MD5

TLSv1.1: idem

TLSv1.2:

server selection: enforce server preferences

3f- (key: RSA) ECDHE_RSA_WITH_AES_256_CBC_SHA384

3f- (key: RSA) ECDHE_RSA_WITH_AES_128_CBC_SHA256

3f- (key: RSA) ECDHE_RSA_WITH_AES_256_CBC_SHA

3f- (key: RSA) ECDHE_RSA_WITH_AES_128_CBC_SHA

3f- (key: RSA) DHE_RSA_WITH_AES_256_GCM_SHA384

3f- (key: RSA) DHE_RSA_WITH_AES_128_GCM_SHA256

3f- (key: RSA) DHE_RSA_WITH_AES_256_CBC_SHA

3f- (key: RSA) DHE_RSA_WITH_AES_128_CBC_SHA

3-- (key: RSA) RSA_WITH_AES_256_GCM_SHA384

3-- (key: RSA) RSA_WITH_AES_128_GCM_SHA256

3-- (key: RSA) RSA_WITH_AES_256_CBC_SHA256

3-- (key: RSA) RSA_WITH_AES_128_CBC_SHA256

3-- (key: RSA) RSA_WITH_AES_256_CBC_SHA

3-- (key: RSA) RSA_WITH_AES_128_CBC_SHA

3-- (key: RSA) RSA_WITH_3DES_EDE_CBC_SHA

3-- (key: RSA) RSA_WITH_RC4_128_SHA

3-- (key: RSA) RSA_WITH_RC4_128_MD5

As can be seen from the RC4 entries in the output above, Microsoft is still supporting the RC4 cipher suites in the managed domain. RC4 has been known to be a vulnerable algorithm for years now, and it’s disappointing to see it still supported, especially since I haven’t seen any options available to disable it within the managed domain. While 3DES still has a fair amount of usage, there have been documented vulnerabilities and NIST plans to disallow it for TLS in the near future. While commercial customers may be more willing to deal with the continued use of these algorithms, government entities will not.
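Picking the problem suites out of a long list by eye gets old quickly, so here is a minimal sketch of how the check could be automated. The marker table and function name are my own, the sample list is a subset of the TLSv1.2 output above, and the substring matching is a deliberately crude heuristic rather than a complete policy.

```python
# Substrings that mark a suite as weak, with a short reason for each.
# This table is an illustrative assumption, not a full NIST policy.
WEAK_MARKERS = {
    "RC4": "RC4 stream cipher (prohibited for TLS by RFC 7465)",
    "3DES": "3DES, a 64-bit block cipher (Sweet32)",
    "MD5": "MD5-based MAC",
}


def weak_suites(suites):
    """Map each weak suite name to the list of reasons it was flagged."""
    flagged = {}
    for name in suites:
        reasons = [why for marker, why in WEAK_MARKERS.items() if marker in name]
        if reasons:
            flagged[name] = reasons
    return flagged


# A subset of the TLSv1.2 suites reported above.
tls12_sample = [
    "ECDHE_RSA_WITH_AES_256_CBC_SHA384",
    "DHE_RSA_WITH_AES_128_GCM_SHA256",
    "RSA_WITH_AES_128_GCM_SHA256",
    "RSA_WITH_3DES_EDE_CBC_SHA",
    "RSA_WITH_RC4_128_SHA",
    "RSA_WITH_RC4_128_MD5",
]

for suite, reasons in weak_suites(tls12_sample).items():
    print(suite, "->", "; ".join(reasons))
```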

Let’s now jump over to Kerberos and check out what encryption types are supported by the managed DC. For that we pull up ADUC and check the msDS-SupportedEncryptionTypes attribute of the DC’s computer object. The attribute is set to a value of 28, which is the default for Windows Server 2012 R2 DCs. In ADUC we can see that this value translates to support of the following algorithms:

• RC4_HMAC_MD5

• AES128_CTS_HMAC_SHA1_96

• AES256_CTS_HMAC_SHA1_96
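The value 28 is a bit mask: 28 = 0x1C = 0x04 + 0x08 + 0x10. A small sketch of the decoding, using the bit assignments Microsoft documents for this attribute (the function name is mine):

```python
# Bit flags of msDS-SupportedEncryptionTypes (low five bits only).
ENC_TYPE_BITS = {
    0x01: "DES_CBC_CRC",
    0x02: "DES_CBC_MD5",
    0x04: "RC4_HMAC_MD5",
    0x08: "AES128_CTS_HMAC_SHA1_96",
    0x10: "AES256_CTS_HMAC_SHA1_96",
}


def decode_enc_types(value):
    """Return the encryption type names whose bits are set in `value`."""
    return [name for bit, name in ENC_TYPE_BITS.items() if value & bit]


print(decode_enc_types(28))
# ['RC4_HMAC_MD5', 'AES128_CTS_HMAC_SHA1_96', 'AES256_CTS_HMAC_SHA1_96']
```

By the same arithmetic, an AES-only configuration would be 0x08 + 0x10 = 24.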

Again we see more support for RC4, which should be a big no-no in the year 2018. This is a risk that orgs using AAD DS will need to live with unless Microsoft adds some options to harden the managed DCs.

Last but not least I was curious if Microsoft had support for NTLMv1. By default Windows Server 2012 R2 supports NTLMv1 due to requirements for backwards compatibility. Microsoft has long recommended disabling NTLMv1 due to the documented issues with the security of the protocol. So has Microsoft followed their own advice in the AAD DS environment?

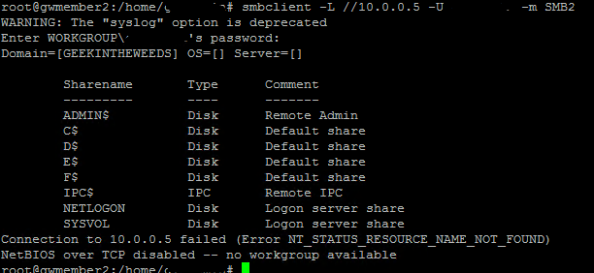

To check this I’m going to use Samba’s smbclient package on the Ubuntu VM. I’ll use smbclient to connect to the DC’s share from the Ubuntu box using the NTLM protocol. Samba enforces the use of NTLMv2 in smbclient by default, so I needed to make some modifications to the global section of the smb.conf file by adding client ntlmv2 auth = no. This option disables NTLMv2 on smbclient and forces it to use NTLMv1.

After saving the changes to smb.conf I exit back to the terminal and try opening a connection with smbclient. The options I used do the following:

- -L -> List the shares on my DC’s IP address

- -U -> My domain user name

- -m -> Use the SMB2 protocol

While I ran the command I also did a packet capture using tcpdump, which I moved over to my Windows box using WinSCP. I then opened the capture with Wireshark and navigated to the packet containing the Session Setup Request. In the parsed capture we don’t see an NTLMv2 Response, which means NTLMv1 was used to authenticate to the domain controller, indicating NTLMv1 is supported by the managed domain controllers.
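The reason the two can be told apart in a capture comes down to the size of the NT response field in the NTLM AUTHENTICATE message: an NTLMv1 response is a fixed 24 bytes, while an NTLMv2 response is a 16-byte proof followed by a variable-length client blob, so it is always longer. A rough sketch of that length check follows. The function name is hypothetical, and it assumes you have already extracted the raw NT response bytes from the capture.

```python
NTLMV1_RESPONSE_LEN = 24  # fixed size of an NTLMv1 NT response


def classify_nt_response(nt_response: bytes) -> str:
    """Classify an NT response from an NTLM AUTHENTICATE message by length.

    Note: NTLMv1 with extended session security also produces a 24-byte
    response, so "NTLMv1" here covers both variants.
    """
    if len(nt_response) == NTLMV1_RESPONSE_LEN:
        return "NTLMv1"
    if len(nt_response) > NTLMV1_RESPONSE_LEN:
        return "NTLMv2"
    return "unknown"  # e.g. an empty response from an anonymous session


print(classify_nt_response(bytes(24)))  # NTLMv1
print(classify_nt_response(bytes(16 + 32)))  # NTLMv2
```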

Based upon what I’ve observed from poking around and running these tests I’m fairly confident Microsoft is using a very out-of-the-box configuration for the managed Windows Active Directory domain. There doesn’t seem to be much of an attempt to harden the domain against some of the older and well known risks. I don’t anticipate this offering being very appealing to organizations with strong security requirements. Microsoft should look to offer some hardening options that would be configurable through the Azure Portal. Those hardening options are going to need to include some type of access to the logs like I mentioned in my last post. Anyone who has tried to rid their network of insecure cipher suites or older authentication protocols knows the importance of access to the domain controller logs to the success of that type of effort.

My next post will be the final post in this series. I’ll cover the option Microsoft provides to expose LDAPS to the Internet (WHY OH WHY WOULD YOU DO THAT?), summarize my findings, and mention a few other interesting things I came across during the study for this series.

Thanks!

Hello Matthew

First off thank you for taking the time to write such an excellent blog 🙂

So would AAD DS be suitable in the following situation?

You have an App called Application-X which normally runs on a domain joined member server on a traditional on-premise AD

You want to host this Application-X in the cloud by standing up as IaaS and you need it to be on a Server which is part of an Active Directory domain, however you do not want to link or extend your on-premise AD domain to the cloud.

You have an existing AD Tenant

You need to give one or more security principals in your AAD DS domain some level of access to Application-X, but for ease of administration you want to use an existing principal in your Azure AD tenant. Therefore you sync Azure AD user User-X from Azure AD to AAD DS and give the user access to the application.

Is my understanding from your blog about what is possible with AAD DS correct so far?

If so, I am wondering how User-X can get access to Application-X when sitting at their desk back in the office, potentially with no standard network route from the on-premise office to this AAD DS, e.g. no ExpressRoute or site-to-site VPN.

For example, I assume User-X authenticates to Azure AD so they now have an Azure AD token to prove their ID. However, AAD DS uses Kerberos (e.g. let’s assume Application-X is not claims aware) and therefore requires traditional on-premise AD protocols.

How does the user take their Azure AD token and in effect use it to obtain a Kerberos service ticket for the app in the AAD DS realm? Do you, for example, need to stand up WAP (Web Application Proxy) joined to the AAD DS domain to do PTA (pass-through authentication) with WAP pre-authentication, or use WIA (Windows Integrated Authentication), again via WAP?

Can you please help me understand this last part of the puzzle

Thanks very much in advance

Jo

Hi Jo,

Thanks for the feedback! It’s great to know that someone is getting some value out of the blog.

Your understanding of AAD DS is correct in that one of the purposes of the service is to provide identity data and authentication/authorization services for legacy applications (i.e. apps dependent on LDAP, Kerberos/NTLM). It accomplishes this by sourcing identity data from an Azure AD tenant, effectively extending some level of capabilities outside of a modern identity data store (i.e. accessible via a web-based API) and authentication/authorization service (i.e. SAML, OAuth, OpenID Connect). You’d need to do more analysis of Application-X to determine if any of the limitations in AAD DS would be a show stopper (e.g. it needs to be accessed by security principals in another domain or forest, is dependent on schema extensions, etc.) but your basic example would be a viable use case to explore.

The second half of your question is really interesting, well written, and shows you have a good understanding of the concepts you’re asking about.

I’m looking forward to the chance to talk geek with you!

Based upon your description it looks like you have a requirement that no direct connection between your on-premises and Azure network will be established. This is going to mean your client is going to need to access your application via some endpoint exposed to the Internet (I’m assuming point-to-site VPNs are out of the question as well). You didn’t say whether the app is a native app or a web app, so I’m going to assume it’s a web app given you mentioned using the WAP.

The WAP would be one option to expose the application to the Internet. You could even use some network security groups to lock down access to the WAP to only your on-premises public address space to further mitigate the risk at layers 3 and 4.

You didn’t mention what authentication protocols your application supports, but I’m going to assume it’s a legacy protocol like Kerberos since you mentioned you have a dependency on AD DS. Given this limitation, I’m not sure how you could go about leveraging the security token the user received from Azure AD (id token and access token) with the WAP.

The challenge you need to solve in this scenario is achieving protocol transition: converting that modern security token (OpenID Connect or OAuth) back down to a legacy security token (Kerberos).

Another option you could explore would be using the Azure AD Application Proxy. Azure AD Application Proxy has the capability of converting the security token received from Azure AD back down to a Kerberos ticket for the user. The pattern used by the proxy is to extract the UPN and SPN from the id token and access token, then use the S4U2Self/S4U2Proxy protocol extensions of Microsoft’s implementation of Kerberos to obtain a Kerberos ticket on behalf of the user, which is then passed to the app so the user is granted access. Assuming you have the UPN defined for the user in Azure AD matching the UPN in your instance of AAD DS, it should work. I think you’d also have to create a new user in AAD DS to represent your application’s identity so that you’d be able to set the service principal name attribute. I’ve never tried the Application Proxy with AAD DS so I can’t say for sure it would work, but it’d be the method I’d try. You’d also gain all the benefits of using Azure AD as the authentication front end, including the behavior analytics, MFA, and conditional access.

I’m in the process of working on a series for Cloud App Security, so my lab is in flux right now and I can’t quickly test it out. If you get a chance to test out either option, I’d be really interested in hearing your results.

By the way, I’d love to connect with you on LinkedIn if you’re on there.

https://www.linkedin.com/in/matthewfeltonma

Hi Matt (or do you prefer Matthew?)

Thanks for the comprehensive reply. Once I have found out more about the application this will help narrow in on a solution. I really like your idea of the “Azure AD Application Proxy”. I have not used it either at the moment (I will have to set it up in my Azure lab when I get a moment). Quite often (and I am sure others in IT have experienced this) as an engineer you get a requirement come down with little to no information and have to work it out (which I quite like most of the time).

Without going off topic, the Service for User to Self (S4U2Self) is an interesting one, isn’t it, as it does a “partial” logon to retrieve the security principal’s ID/group SIDs in order to compare against ACLs etc. An interesting side effect of this is when you are checking the effective rights of another user (in the ADUC GUI for example) and therefore invoking S4U2Self, which updates that user’s lastLogonTimestamp (replicated) attribute. So the user may not have logged on for 4 weeks, but their lastLogonTimestamp shows they logged on yesterday (e.g. when you checked their effective rights to some resource).

Your blog is excellent and the community really needs people like you to give back to others. I am sure all your readers really appreciate your efforts. I actually connected with you via LinkedIn very recently, but when I am on the internet I generally use a pseudonym (may seem a little odd I know, but I just prefer that). Anyway, I will send you a message via LinkedIn (so keep an eye out for it), and once again keep up the excellent work on your blog 🙂

Cheers
