Today I’m taking a break from my AI series to cover an interesting topic that came up at a customer.

My customer base exists within the heavily regulated financial services industry, which means a strong security posture. Many of these customers have requirements for inspection of network traffic, which includes traffic between devices within their internal network space. This requirement gets interesting when talking about inspection of traffic destined for a service behind a Private Endpoint. I’ve posted extensively on Private Endpoints on this blog, including how to perform traffic inspection in a traditional hub and spoke network architecture. One area I hadn’t yet delved into was how to achieve this using Azure Virtual WAN (VWAN).

VWAN is Microsoft’s attempt to iterate on the hub and spoke networking architecture and make the management and setup of the networking more turnkey. Achieving that goal has been an uphill battle for VWAN, which has historically required very complex architectures to achieve the network controls regulated industries strive for. There has been solid progress over the past few months, with routing intent and support for additional third-party next generation firewalls running in the VWAN hub (such as Palo Alto) becoming available. These improvements have opened the doors for regulated customers to explore VWAN Secure Hubs as a substitute for a traditional hub and spoke. This brings us to our topic: How do VWAN Secure Hubs work when there is a requirement to inspect traffic destined for a Private Endpoint?

My first inclination when pondering this question was that it would work in the same way a traditional hub and spoke works. In past posts I’ve covered that pattern. You can take a look at this repository I’ve put together which walks through the protocol flows in detail if you’re curious. The short of it is that inspection requires enabling network policies for the subnet the Private Endpoints are deployed to and SNATing at the firewall. The SNAT is required at the firewall because Private Endpoints do not obey user-defined routes defined in a route table, so return traffic goes straight back to whatever source address the Private Endpoint saw. Without the SNAT you get asymmetric routing that is a nightmare to identify and troubleshoot. Making it even more confusing, some services like Azure Storage will magically keep traffic symmetric, as I’ve covered in past posts. Best practice for traditional hub and spoke is SNATing for firewall inspection with Private Endpoints.
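To make the asymmetry concrete, here is a toy model in Python. It is only a sketch of the reasoning above, not how Azure's data plane is implemented: the IP addresses are made up, and the rule "the reply only reaches the firewall if it is addressed to the firewall" is a simplifying assumption standing in for "the Private Endpoint ignores the route table".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


# Hypothetical addresses, used only for illustration.
CLIENT = "10.0.0.4"            # on-premises or spoke client
FIREWALL = "10.1.0.4"          # firewall private IP in the hub
PRIVATE_ENDPOINT = "10.2.0.5"  # Private Endpoint NIC in the spoke


def forward_through_firewall(pkt: Packet, snat: bool) -> Packet:
    """Firewall forwards the request, optionally rewriting the source to itself."""
    return Packet(src=FIREWALL if snat else pkt.src, dst=pkt.dst)


def reply_from_private_endpoint(request: Packet) -> Packet:
    """The Private Endpoint answers directly to the source address it saw."""
    return Packet(src=request.dst, dst=request.src)


def return_path_hits_firewall(snat: bool) -> bool:
    request = forward_through_firewall(Packet(CLIENT, PRIVATE_ENDPOINT), snat)
    reply = reply_from_private_endpoint(request)
    # Simplifying assumption: no route table steers the reply, so it only
    # lands on the firewall when the firewall is the reply's destination.
    return reply.dst == FIREWALL


print("SNAT on :", return_path_hits_firewall(True))
print("SNAT off:", return_path_hits_firewall(False))
```

With SNAT the reply is addressed to the firewall and the flow stays symmetric; without it the reply is addressed to the client and, in this model, bypasses the firewall.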

My first stop was to read through the Microsoft documentation. I came across this article first which walks through traffic inspection with Azure Firewall with a VWAN Secure Hub. As expected, the article states that SNAT is required (yes I’m aware of the exception for Azure Firewall Application Rules, but that is the exception and not the rule and very few in my customer space use Azure Firewall). Ok great, this aligns with my understanding. But wait, this article about Secure Hub with routing intent does not mention SNAT at all. So is SNAT required or not?

When public documentation isn’t consistent (which of course NEVER happens) it’s time to lab and see what we see. I threw together a single region VWAN Secure Hub with Azure Firewall, enabled routing intent for both Internet and Private traffic, and connected my home lab over a S2S VPN. I then created Private Endpoints for a Key Vault and an Azure SQL resource. Per the latter article mentioned above, I enabled Private Endpoint Network Policies for the snet-svc subnet in the spoke virtual network. Finally, I created a single Network Rule allowing traffic on ports 443 and 1433 from my lab to the spoke virtual network. This ensured I didn’t run into the transparent proxy aspect of Application Rules throwing off my findings.

If you were doing this in the “real world” you’d set up a packet capture on the firewall and validate you see both sides of the conversation. If you’ve used Azure Firewall, you’re well aware it does not yet support packet captures, making this impossible. Thankfully, Microsoft has recently introduced Azure Firewall Structured Logs, which include a log called the Azure Firewall Flow Trace Log. This log will show you the gooey details of the TCP conversation and helps to fill the gap of troubleshooting asymmetric traffic while Microsoft works on offering a packet capture capability (a man can dream, can’t he?).

While the rest of the Azure Firewall Structured Logs need nothing special to be configured, the Flow Trace Logs do (likely because as of 8/20/2023 they’re still in public preview). You need to follow the instructions located within this document. Make sure you give it a solid 30 minutes after completing the steps to enable the feature before you enable the log through the diagnostic settings of the Azure Firewall. Also, do not leave this running. Beyond the performance hit that can occur because of how chatty this log is, you could also be in a world of hurt from a big Log Analytics Workspace bill if you leave it running.

Once I had my lab deployed and the Flow Trace Logs working, I went ahead and tested using the Test-NetConnection PowerShell cmdlet from a Windows machine in my home lab. This is a wonderful cmdlet if you need something built in to Windows to do a TCP Ping.

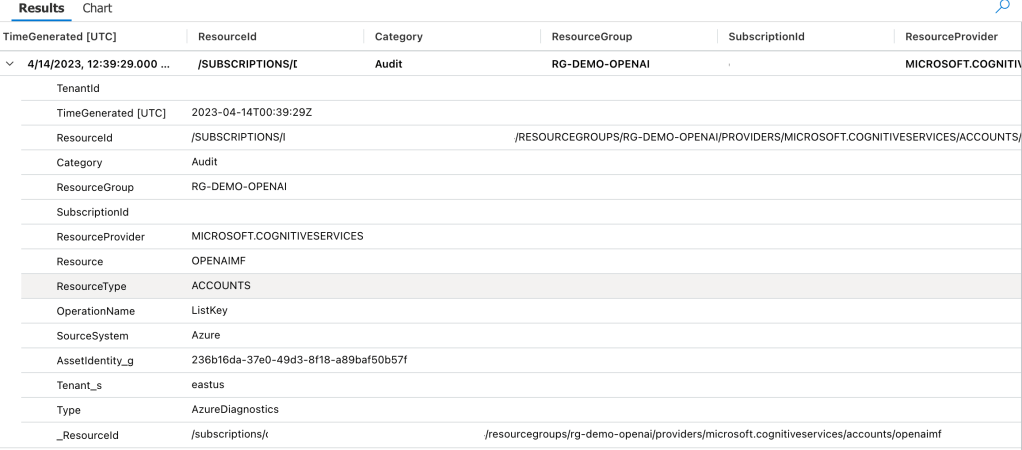

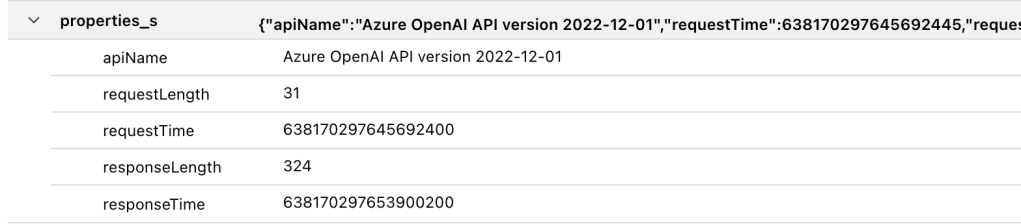

In the above image you can see that the TCP Ping to port 1433 of an Azure SQL database behind a Private Endpoint was successful. Review of the Azure Firewall Network Logs showed my Network Rule firing, which tells me the TCP SYN at least passed through the firewall and proves that Private Endpoint Network Policies were successfully affecting the traffic to the Private Endpoint.
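If you’re not on Windows, roughly the same TCP Ping can be sketched in Python with nothing but the standard library. The function name `tcp_ping` and the hostname in the usage comment are placeholders of mine, not something from the lab above.

```python
import socket


def tcp_ping(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within timeout."""
    try:
        # create_connection performs the full three-way handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example usage (placeholder hostname, substitute your own Private Endpoint FQDN):
# print(tcp_ping("myserver.database.windows.net", 1433))
```

Keep in mind what a success does and doesn’t tell you: the client completed the handshake, so a SYN-ACK made it back to the client, but that alone says nothing about whether the SYN-ACK passed back through the firewall.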

What about return traffic? For that I went to the Flow Trace Logs. Oddly enough, the firewall was also receiving the SYN-ACK back from the Private Endpoint, all without SNAT being configured. I repeated the test for an Azure Key Vault behind a Private Endpoint and observed the same behavior (and I’ve confirmed in the past that Azure Key Vault needs SNAT for return traffic in a standard hub and spoke).
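The check I was doing by eye can be sketched in Python: take the flows your Network Rule allowed, then look for a SYN-ACK in the reverse direction among the Flow Trace records. Treat this as an illustration only. The `Flow` tuple, the `"SYN-ACK"` flag string, and the idea that you’ve already exported both logs into simple Python structures are assumptions on my part; check the actual column names and values in your own Log Analytics Workspace.

```python
from typing import Iterable, List, NamedTuple, Tuple


class Flow(NamedTuple):
    src: str
    src_port: int
    dst: str
    dst_port: int


def flows_missing_syn_ack(
    allowed: Iterable[Flow],
    trace: Iterable[Tuple[Flow, str]],
) -> List[Flow]:
    """Return allowed flows for which no SYN-ACK was logged coming back."""
    # Every flow direction for which the firewall logged a SYN-ACK.
    syn_acks = {flow for flow, flag in trace if flag == "SYN-ACK"}
    missing = []
    for f in allowed:
        # The SYN-ACK travels in the opposite direction of the original SYN.
        reverse = Flow(f.dst, f.dst_port, f.src, f.src_port)
        if reverse not in syn_acks:
            missing.append(f)
    return missing


# Hypothetical sample data: one symmetric flow, one asymmetric flow.
allowed = [
    Flow("192.168.1.10", 50001, "10.2.0.5", 1433),
    Flow("192.168.1.10", 50002, "10.2.0.6", 443),
]
trace = [
    (Flow("10.2.0.5", 1433, "192.168.1.10", 50001), "SYN-ACK"),
]

for flow in flows_missing_syn_ack(allowed, trace):
    print("No SYN-ACK seen at the firewall for:", flow)
```

Any flow this reports is a candidate for asymmetric routing: the firewall passed the SYN but never saw the reply.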

So is SNAT required or not? You’re likely expecting me to answer yes or no. Well, today I’m going to surprise you with “I don’t know”. While testing with these two services in this architecture seemed to indicate it was not, I’ve circulated these findings within Microsoft and the recommendation to SNAT to ensure flow symmetry remains. As I’ve documented in prior posts, not all Azure services behave the same way with traffic symmetry and Azure Private Endpoints (Azure Storage, for example), and for consistency you should be SNATing. Do not rely on your testing of a few services in a very specific architecture as being gospel. You should be following the practices outlined in the documentation.

I feel like I’m ending this blog Sopranos-style with a fade to black, but sometimes even tech has mystery. In this post you got a taste of how Flow Trace Logs can help troubleshoot traffic symmetry issues when using Azure Firewall, and you learned that not all things in the cloud work the way you expect them to work. Sometimes that is intentional and sometimes it’s not. When you run into this type of situation where the behavior you’re observing doesn’t match the documentation, it’s always best to do what is documented (in this case you should be doing SNAT). Maybe it’s something you’re doing wrong (this is me we’re talking about) or maybe you don’t have all the data (I tested 2 of 100+ services). If you go with what you experience, you risk that undocumented behavior being changed or corrected and then being in a heap of trouble in the middle of the night (oh the examples I could give of this across my time at cloud providers over a glass of Titos).

Well folks, that wraps things up. TL;DR: SNAT until the documentation says otherwise, regardless of what you experience.

Thanks!