This is part of my series on GenAI Services in Azure:

- Azure OpenAI Service – Infra and Security Stuff

- Azure OpenAI Service – Authentication

- Azure OpenAI Service – Authorization

- Azure OpenAI Service – Logging

- Azure OpenAI Service – Azure API Management and Entra ID

- Azure OpenAI Service – Granular Chargebacks

- Azure OpenAI Service – Load Balancing

- Azure OpenAI Service – Blocking API Key Access

- Azure OpenAI Service – Securing Azure OpenAI Studio

- Azure OpenAI Service – Challenge of Logging Streaming ChatCompletions

- Azure OpenAI Service – How To Get Insights By Collecting Logging Data

- Azure OpenAI Service – How To Handle Rate Limiting

- Azure OpenAI Service – Tracking Token Usage with APIM

- Azure AI Studio – Chat Playground and APIM

- Azure OpenAI Service – Streaming ChatCompletions and Token Consumption Tracking

- Azure OpenAI Service – Load Testing

Updates

- 5/28/2024 – Updated to mention that objectid of security principal is now included in native diagnostic logs

Hello again folks! Today I’m going to bounce back into AOAI (Azure OpenAI Service) mode and cover a topic that frequently comes up with my customers with regard to securing the service. Over the past year I’ve covered the infrastructure and security controls available within AOAI. Much of that focus was on accessing the service through an SDK (software development kit) or directly through the API. Today I’m going to spend some time talking about the security controls available to secure the Azure OpenAI Studio, which I’m going to refer to as Studio for the rest of this post.

If you’re unfamiliar with Studio, it’s a GUI-based experience for interacting with the data plane of the AOAI service. In my authorization post, I cover the difference between the service’s management and data planes. At a high level, the management plane is for operations “on the service” while the data plane is for operations “in the service”.

Microsoft recommends using an SDK or the API directly when interacting with the data plane of the service. It’s a good recommendation because there are lots of knobs for you to turn to lock down the service and address gaps in the service. When the service is used through the Azure OpenAI Studio, you lose the ability to inject some type of control component between the user’s endpoint and the instance of AOAI. The rest of this post will cover why that is, what controls are available to you, and what risks you’ll have to accept if you opt to make Studio available to your users.

Before I jump into the details of what you get and what you don’t get, I want to cover what some of the main use cases are for using Studio versus accessing the service through an SDK or directly through the API. First and foremost, it should be obvious that GUIs are much more accessible than having to write code to interact with the API. For example, say I’m performing a PoC (proof-of-concept) of the service and I want to quickly test the GPT-3.5 model’s ability to answer a question in my field. For that I can use the Completions interface within Studio to get a chat-like interface with zero coding.

Another use case may be that I want a simple way to test the models on my organization’s data to see if the models can provide value on top of that data. I don’t want to invest a ton of time coding to perform this functionality because I don’t yet know if the models will be able to provide any value on top of my data.

A lot of the use for Studio comes down to its simplicity of use. If you need to do some basic PoC with minimal funding, using Studio can be a nice shortcut to writing all the code you’d need in order to interact with the API to perform the actions.

Long story short, if you’re offering this service to your business units you’re likely going to be asked to provide Studio access to your users. My goal here is to help you understand the risks and mitigations of doing so.

So how does the Azure OpenAI Studio work? It appears to use an MVC (model-view-controller) architecture (or something similar to it for those application developer purists who are much smarter than me). In simple terms for non-developers like myself, the Studio application instructs the user’s browser which data plane endpoints to call and then provides a pretty view in the user’s browser of the responses received from those endpoints.

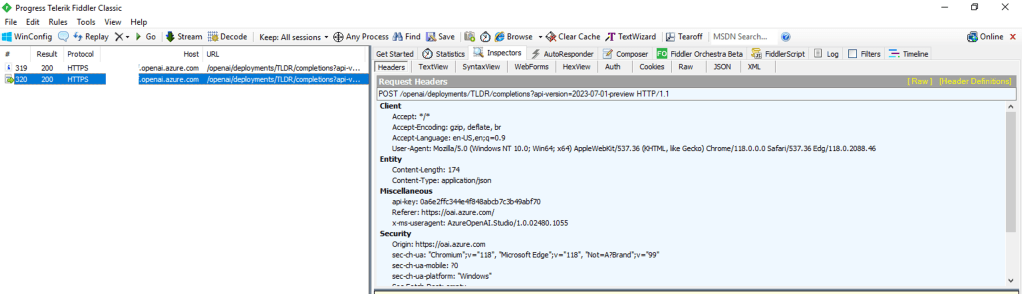

For you non-developers like myself, I find it helpful to perform an action within Studio and then review the Fiddler capture to observe what the browser did. In the Fiddler capture below, I used the Chat Completion interface in Studio to request a completion. You can see that the request was sent from my browser to the data plane endpoint of the service (openai.azure.com).

This trait of the Studio can work to your advantage when you need to secure the Studio. If the calls are made from the user’s endpoint to the data plane, then that means network controls around the data plane can be used to enforce control over access to the Studio for the instance of AOAI. As I covered in my prior posts, AOAI is no different from other Microsoft PaaS services and provides the standard network controls which include the service firewall and support for Private Endpoints.
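To make that call pattern a bit more concrete, here is a minimal Python sketch of the kind of data plane URL a Chat Completions request targets. The resource name `my-aoai-instance`, the deployment name `gpt-35-turbo`, and the `api-version` value are placeholders I picked for illustration and are not taken from the capture. The point is only that the host is the instance's own `openai.azure.com` endpoint, which is why network controls on that endpoint also apply to Studio.

```python
from urllib.parse import urlencode, urlsplit


def chat_completions_url(resource_name: str, deployment: str,
                         api_version: str = "2024-02-01") -> str:
    """Build the data plane URL for a Chat Completions call.

    All three arguments are illustrative placeholders; substitute the
    values for your own AOAI instance.
    """
    query = urlencode({"api-version": api_version})
    return (
        f"https://{resource_name}.openai.azure.com"
        f"/openai/deployments/{deployment}/chat/completions?{query}"
    )


# Hypothetical instance and deployment names.
url = chat_completions_url("my-aoai-instance", "gpt-35-turbo")
print(urlsplit(url).hostname)  # the host the network controls must cover
```

Whether the request comes from Studio in a browser, an SDK, or a raw HTTP client, it ends up at this same hostname.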

A common security standard for organizations using Azure is to use Private Endpoints for PaaS services. Private Endpoints allow you to restrict access to the public IP of a PaaS service and limit it to access through an endpoint deployed in the customer’s virtual network. Accessing the service through the Private Endpoint requires the user’s endpoint to be within your organization’s private network. This means by creating a Private Endpoint you can restrict access to the AOAI instance through Studio to endpoints within your private network. If the user attempts to access the AOAI instance through Studio outside of the private network, they’ll be blocked and will receive the error you see below.

Placing your AOAI instance behind a Private Endpoint will be your primary means of controlling access to an AOAI instance through Studio. Creating the Private Endpoint and blocking public access keeps users from accessing the AOAI instance through Studio when hitting the public IP. However, users can still reach the AOAI instance through Studio if they are on the private network. You can lock that down by wrapping an NSG (Network Security Group) around the subnet containing the Private Endpoint, turning on Private Endpoint Network Policies in the subnet, and placing some type of mediator (such as an Azure API Management instance) between the user’s endpoint and the AOAI instance. That will restrict the users to the API when interacting with the AOAI instance.
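If it helps to reason about the NSG piece, here is a rough Python model of how priority-ordered rules would treat traffic headed for the Private Endpoint subnet. This is only a thinking aid and not Azure's implementation. The CIDR `10.0.1.0/24` standing in for the API Management subnet and the rule priorities are assumptions I made up for the example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int         # lower number is evaluated first, as in an NSG
    source: str           # source address range in CIDR notation
    port: Optional[int]   # destination port, None means any port
    allow: bool


# Hypothetical inbound rules for the subnet holding the Private Endpoint.
RULES = [
    Rule(100, "10.0.1.0/24", 443, True),    # assumed API Management subnet
    Rule(4096, "0.0.0.0/0", None, False),   # explicit deny for everything else
]


def is_allowed(source_ip: str, port: int, rules=RULES) -> bool:
    """Return the decision of the first rule that matches, by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        in_range = ip_address(source_ip) in ip_network(rule.source)
        port_ok = rule.port is None or rule.port == port
        if in_range and port_ok:
            return rule.allow
    return False  # nothing matched: treat as denied in this sketch


print(is_allowed("10.0.1.5", 443))   # mediator subnet
print(is_allowed("10.0.2.7", 443))   # a user workstation on the private network
```

In this model only the mediator's subnet gets through on 443, so a browser running Studio from elsewhere on the private network never reaches the Private Endpoint directly.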

Outside of network controls, you don’t have much ability to control Studio access. There are no specific RBAC permissions that I’m aware of today that could be stripped from an RBAC role to prevent access to Studio. When it comes to authorization you should strive for least privilege as you’ve always done. My authorization blog has some guidance on how to handle that within the service.

Now that you understand what controls you have, let’s talk about the risks you’re going to need to accept if you plan on granting users access to Studio.

First and foremost, you’re going to need to accept the very basic logging provided by the diagnostics logging available within an AOAI instance. As I cover in the linked post above, the logging is minimal. Prompts and responses will not be logged, traceability in the logs will be limited, and you won’t get metrics as to token usage per call. The lack of visibility into prompts and responses becomes all that much more critical if you shut off the built-in content filtering and abuse monitoring.

Next up, you won’t have the ability to limit the usage of the service on a per user or per app basis. AOAI has API limits around requests and tokens. There are no capabilities today to control this on a per user or per app basis within a single instance of AOAI.
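To illustrate what you give up, here is a small Python sketch of the per-caller token accounting a mediator sitting in front of the API could do, and that Studio traffic going straight to the data plane skips. It is not how API Management implements anything. `TokenLedger`, the caller IDs, and the quota number are all made up for the example. The only part taken from the service is the `usage` object (with `total_tokens`) that a non-streaming Chat Completions response returns.

```python
from collections import defaultdict


class TokenLedger:
    """Hypothetical per-caller token counter a mediator could maintain."""

    def __init__(self, quota_per_caller: int):
        self.quota = quota_per_caller
        self.used = defaultdict(int)

    def record(self, caller_id: str, response_body: dict) -> int:
        """Add the tokens reported in a response to the caller's running total."""
        usage = response_body.get("usage", {})
        self.used[caller_id] += int(usage.get("total_tokens", 0))
        return self.used[caller_id]

    def over_quota(self, caller_id: str) -> bool:
        """True once the caller has consumed its full (made-up) quota."""
        return self.used.get(caller_id, 0) >= self.quota


# Example response fragment; the numbers are invented.
sample_response = {
    "usage": {"prompt_tokens": 12, "completion_tokens": 30, "total_tokens": 42}
}

ledger = TokenLedger(quota_per_caller=100)
ledger.record("app-a", sample_response)
print(ledger.used["app-a"], ledger.over_quota("app-a"))
```

With Studio there is no place to hang this kind of logic, which is why both the chargeback and the throttling story fall apart.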

Let me summarize what we covered today:

- Network controls are your primary means of securing access to an AOAI instance through the Azure OpenAI Studio

- Placing an AOAI instance behind a Private Endpoint and blocking public access restricts Azure OpenAI Studio access to the AOAI instance to endpoints within your private network

- Azure OpenAI Studio access to an AOAI instance can be blocked completely by placing the AOAI instance behind a Private Endpoint, inserting some sort of mediation solution (such as API Management), and wrapping an NSG around the subnet containing the Private Endpoint which blocks all access but traffic from the mediator.

- Exercise least privilege using Azure RBAC but be aware there is no specific permission that allows access to the Azure OpenAI Studio

- The diagnostic logs provide limited information. Prompts and responses are not logged to the diagnostic logs and neither is token consumption. The former will mean you don’t have visibility into the prompts users are making (think abuse, inclusion of PII, etc) and the latter means you won’t be able to tell who is creating the costs within the instance.

Considering all of the above, my recommendation to customers is to establish an approval process for usage of Studio and ensure there is a strong business need to justify accepting the risks outlined above. The lack of logging is the real gut punch for me. That is a lot of risk, especially since most regulated orgs opt out of content filtering and abuse monitoring.

Nothing too fancy in this post, but hopefully it helps some folks better understand the security options for Azure OpenAI Studio access.

Thanks!