This is part of my series on GenAI Services in Azure:

- Azure OpenAI Service – Infra and Security Stuff

- Azure OpenAI Service – Authentication

- Azure OpenAI Service – Authorization

- Azure OpenAI Service – Logging

- Azure OpenAI Service – Azure API Management and Entra ID

- Azure OpenAI Service – Granular Chargebacks

- Azure OpenAI Service – Load Balancing

- Azure OpenAI Service – Blocking API Key Access

- Azure OpenAI Service – Securing Azure OpenAI Studio

- Azure OpenAI Service – Challenge of Logging Streaming ChatCompletions

- Azure OpenAI Service – How To Get Insights By Collecting Logging Data

- Azure OpenAI Service – How To Handle Rate Limiting

- Azure OpenAI Service – Tracking Token Usage with APIM

- Azure AI Studio – Chat Playground and APIM

- Azure OpenAI Service – Streaming ChatCompletions and Token Consumption Tracking

- Azure OpenAI Service – Load Testing

Yeah, yeah, yeah, I missed posting in July. I have been appropriately shamed on a daily basis by WordPress reminders.

I’m going to make up for it today by covering another of the “Generative AI Gateway” features of APIM (Azure API Management) that were announced a few months back. I’ve already covered the circuit breaker and load balancing and the token-based rate limiting features. These two features have made it far easier to distribute and control the usage of the AOAI (Azure OpenAI Service) that is being offered as a core enterprise service. One of the challenges that isn’t addressed by those features is charge backs.

As I’ve covered in prior posts, you can get away with an instance or two of AOAI dedicated to an app when you have one or two applications at the POC (proof-of-concept) stage. Capacity and charge backs aren’t an issue in that model. However, the number of applications will grow, and so will the volume of tokens and requests those applications require as they move to production. This necessitates AOAI being offered as a core foundational service as basic as DNS or networking. The patterns for doing this involve centrally distributing requests across several instances of AOAI spread across different regions and subscriptions using something like the circuit breaker and load balancing features of APIM. Once you have several applications drawing from a common pool, you then need to control how much each of those applications can consume using something like the token-based rate limiting feature of APIM.

Wonderful! You’ve built a service that has significant capacity and can service your BUs from a central endpoint. Very cool, but how are you gonna determine who is consuming what volume?

You may think, “That information is returned in the response. I can have the developers use a common code snippet to send that information for each response to a central database where I can track it.” Yeah nah, that ain’t gonna work. First, you ain’t ever gonna get that level of consistency across your enterprise (if you do have this, drop me an email because I want to work there). Second, as of today, the APIs do not return the number of tokens used for streaming based chat completions which will be a large majority of what is being sent to the models.

I know you, and you’re determined. You follow-up with, “Well Matt, I’m simply going to pull the native metrics from each of the AOAI instances I’m load balancing to.” Well yeah, you could do that but guess what? Those only show you the total consumed across the instance and do not provide a dimension for you to determine how much of that total was related to a specific application.

“Well Matt, I’m going to configure diagnostic logging for each of my AOAI instances and check off the Request and Response Logs. Surely that information will be in there!” You don’t quit, do you? Let me shatter your hopes yet again: no, that will not work. As I’ve covered in a prior post, while the logs do contain the Entra ID object ID (assuming you used Entra ID-based authentication), you won’t find any token counts in those logs either.

Well fine then, you’re going to use a custom logging solution to capture token usage when it’s returned by the API and calculate it when it isn’t. While yes this does work and does provide a number of additional benefits beyond information for charge backs (and I’m a fan of this pattern) it takes some custom code development and some APIM policy snippet expertise. What if there was an easier way?

That is where the token metrics feature of APIM really shines. This feature allows you to configure APIM to emit a custom metric for the tokens consumed by a Completion, Chat Completion (EVEN STREAMING!!), or Embeddings API call to an AOAI backend with a very basic APIM Policy snippet. You can even add custom dimensions and that is where this feature gets really powerful.

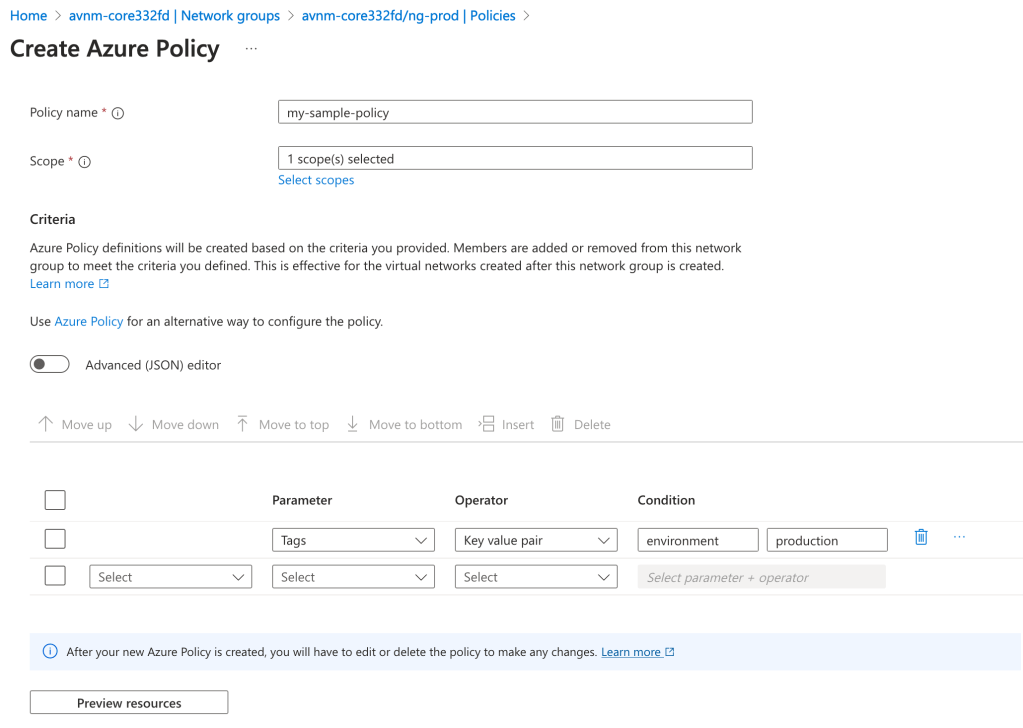

The first step in setting this up is to spin up an instance of Application Insights (if your APIM isn’t already hooked into one) and a Log Analytics Workspace the Application Insights instance will be associated with. Once your App Insights instance is created, you need to modify the settings of the API you’ve defined in APIM for AOAI, turn on the App Insights integration, and enable custom metrics as seen below.

Next up, you need to modify your APIM policy. In the APIM Policy snippet below I extract a few pieces of data from the request and add them as dimensions to the custom metric. Here I’m extracting the Entra ID app id of the security principal accessing the AOAI service (this would be the application’s identity if you’re using Entra ID authentication to the AOAI service) and the model deployment name being called from AOAI, which I’ve standardized to be the same as the model name.

```xml
<!-- Extract the application id from the Entra ID access token -->
<set-variable name="appId" value="@(context.Request.Headers.GetValueOrDefault("Authorization",string.Empty).Split(' ').Last().AsJwt().Claims.GetValueOrDefault("appid", string.Empty))" />

<!-- Extract the model name from the URL -->
<set-variable name="uriPath" value="@(context.Request.OriginalUrl.Path)" />
<set-variable name="deploymentName" value="@(System.Text.RegularExpressions.Regex.Match((string)context.Variables["uriPath"], "/deployments/([^/]+)").Groups[1].Value)" />

<!-- Emit token metrics to Application Insights -->
<azure-openai-emit-token-metric namespace="openai-metrics">
    <dimension name="model" value="@(context.Variables.GetValueOrDefault<string>("deploymentName","None"))" />
    <dimension name="client_ip" value="@(context.Request.IpAddress)" />
    <dimension name="appId" value="@(context.Variables.GetValueOrDefault<string>("appId","00000000-0000-0000-0000-000000000000"))" />
</azure-openai-emit-token-metric>
```

After making a few calls from my code to APIM, the metrics begin to populate in the App Insights instance. To view those metrics you’ll want to go into the App Insights blade and go to the Monitoring -> Metrics section. Under the Metrics Namespace drop down you’ll see the namespace you’ve created in the policy snippet. I named mine openai-metrics.
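If the policy expressions that pull out the app id and deployment name look dense, the rough Python sketch below mirrors what they appear to do: read the `appid` claim out of the bearer token's payload and pull the deployment name out of the URL path with the same regular expression. This is only an illustration of the logic, not APIM's implementation. The function names and the `fake_token` helper are made up for the example, and the token signature is deliberately not verified here (in a real gateway you would validate the token before trusting any claim).

```python
import base64
import json
import re


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def app_id_from_authorization(header: str, default: str = "") -> str:
    """Return the 'appid' claim from a 'Bearer <jwt>' header, or the default.

    Assumption: the signature is NOT checked; this only reads the payload.
    """
    token = header.split(" ")[-1]
    parts = token.split(".")
    if len(parts) != 3:
        return default
    try:
        claims = json.loads(b64url_decode(parts[1]))
    except ValueError:  # covers bad base64, bad UTF-8, and bad JSON
        return default
    if not isinstance(claims, dict):
        return default
    return claims.get("appid", default)


def deployment_from_path(path: str) -> str:
    """Return the segment after /deployments/, or '' when there is none."""
    match = re.search(r"/deployments/([^/]+)", path)
    return match.group(1) if match else ""


def fake_token(claims: dict) -> str:
    """Build an unsigned, JWT-shaped string for demo purposes only."""
    def enc(obj: dict) -> str:
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return f"{enc({'alg': 'none'})}.{enc(claims)}.signature"


if __name__ == "__main__":
    header = "Bearer " + fake_token({"appid": "11111111-2222-3333-4444-555555555555"})
    print(app_id_from_authorization(header))
    print(deployment_from_path("/openai/deployments/gpt-4/chat/completions"))
```

Falling back to a default instead of raising keeps the metric flowing even when a caller shows up without a usable token, which is the same spirit as the default values in the `dimension` elements.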

I can now select metrics based on prompt tokens, completion tokens, and total tokens consumed. Here I select the completion tokens and split the data by the appId, client IP address, and model to give me a view of how many tokens each app is consuming and of which model at any given time span.

Very cool right?
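Once the token counts are split by appId and model, turning them into a charge back is mostly arithmetic. Here is a minimal Python sketch, assuming you have exported the per-app totals into rows of (appId, model, prompt tokens, completion tokens). The row layout, the sample numbers, and the rates are all placeholders invented for illustration; they are not real usage data or real Azure pricing.

```python
from collections import defaultdict


def chargeback(rows, rates_per_1k):
    """Sum the cost per app.

    rows: iterable of (app_id, model, prompt_tokens, completion_tokens)
    rates_per_1k: {model: (prompt_rate, completion_rate)} per 1,000 tokens
    """
    costs = defaultdict(float)
    for app_id, model, prompt_tokens, completion_tokens in rows:
        prompt_rate, completion_rate = rates_per_1k[model]
        costs[app_id] += prompt_tokens / 1000 * prompt_rate
        costs[app_id] += completion_tokens / 1000 * completion_rate
    return dict(costs)


# Placeholder data: made-up apps, token counts, and rates.
sample_rows = [
    ("app-a", "gpt-4", 1000, 2000),
    ("app-b", "gpt-4", 4000, 0),
    ("app-a", "gpt-35-turbo", 2000, 2000),
]
sample_rates = {
    "gpt-4": (1.0, 2.0),
    "gpt-35-turbo": (0.5, 0.5),
}

if __name__ == "__main__":
    for app_id, cost in sorted(chargeback(sample_rows, sample_rates).items()):
        print(f"{app_id}: {cost:.2f}")
```

Prompt and completion tokens are kept separate because they are emitted as separate metrics and are commonly billed at different rates.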

As of today, there are some key limitations to be aware of:

- Only Chat Completions, Completions, and Embeddings API operations are supported.

- Each API operation is further limited by which models it supports. For example, as of August 2024, Chat Completions only supports gpt-3.5 and gpt-4. No 4o support yet unfortunately.

- If you’re using a load balanced pool backend, you can’t yet use the actual backend the pool sends the request to as a dimension.

Well folks, hopefully this helps you better understand why this functionality was added and the value it provides. While you could do this with another API Gateway (pick your favorite), it likely won’t be as simple as it is with APIM’s policy snippet. Another win for cloud native I guess!

Thanks!