This is part of my series on GenAI Services in Azure:

- Azure OpenAI Service – Infra and Security Stuff

- Azure OpenAI Service – Authentication

- Azure OpenAI Service – Authorization

- Azure OpenAI Service – Logging

- Azure OpenAI Service – Azure API Management and Entra ID

- Azure OpenAI Service – Granular Chargebacks

- Azure OpenAI Service – Load Balancing

- Azure OpenAI Service – Blocking API Key Access

- Azure OpenAI Service – Securing Azure OpenAI Studio

- Azure OpenAI Service – Challenge of Logging Streaming ChatCompletions

- Azure OpenAI Service – How To Get Insights By Collecting Logging Data

- Azure OpenAI Service – How To Handle Rate Limiting

- Azure OpenAI Service – Tracking Token Usage with APIM

- Azure AI Studio – Chat Playground and APIM

- Azure OpenAI Service – Streaming ChatCompletions and Token Consumption Tracking

- Azure OpenAI Service – Load Testing

Updates:

- 9/25/2024 – Calculating streaming token usage just got easier! Check out my updated article on the topic

- 5/17/2024 – My beefed-up version of Shaun’s awesome solution to handle logging streaming ChatCompletions

- 5/17/2024 – PowerProxy – An alternative solution developed by some wonderful folks at Microsoft

Hello again, fellow geeks. Today I’m back with another Azure OpenAI Service (AOAI) post. I’ve talked in the past about the gaps in the native logging for the AOAI service, namely that the logs lack traceability and the token usage details needed for chargebacks. I was lucky enough to work with Jake Wang and others on a reference architecture that addresses these gaps using Azure API Management (APIM). I also wrote some custom APIM policies that show how this information can be captured within APIM. I’ve observed customers come up with creative solutions: tactically, capturing the data within the application sitting in front of AOAI, and more strategically, using third-party API gateway products such as Apigee or even building highly functional (and complex) custom gateways. However, there was a use case that some of these solutions (such as the custom policies I wrote) didn’t account for: streaming completions.

Like OpenAI’s API, the AOAI service API supports streaming chat completions. A streaming completion returns the model’s output as a series of events as the tokens are generated, whereas a non-streaming completion returns the entire completion once the model has finished. The benefit of streaming is a better user experience. There have been studies showing that delays longer than 10 seconds won’t hold a user’s attention. By streaming the completion as it’s generated, the user gets immediate feedback that the application is responding.

The OpenAI documentation points out a few challenges when using streaming completions. First, the response from the API no longer includes token usage, which means you need to calculate token usage by some other means, such as OpenAI’s open-source tokenizer tiktoken. Second, moderating content becomes difficult because each event contains only a partial completion. Beyond those challenges, there is another one specific to APIM. As my peer Shaun Callighan points out, Microsoft does not recommend logging the request/response body when the API returns a stream of server-sent events, as it does with streaming chat completions, because doing so can cause unexpected buffering (which is exactly what happens with streaming chat completions). The result is that the application user does not get the experience the application owner intended. In my testing, nothing was returned until the model had finished the completion.
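Because a streamed response omits the usage block, token counts have to be estimated by whoever sees the traffic. The sketch below is a minimal, non-authoritative example, assuming you pass in an encoder function such as tiktoken's `encoding_for_model("gpt-3.5-turbo").encode`. The per-message overhead constants (3 tokens per message, 1 extra when a `name` field is present, 3 to prime the assistant reply) follow the approximation in OpenAI's token-counting cookbook for gpt-3.5-turbo-0613/gpt-4-era models; they are assumptions that may not hold for other model versions, so treat the results as estimates rather than billing-grade numbers.

```python
from typing import Callable, Dict, List

# An encoder maps text to a list of tokens, e.g. tiktoken's Encoding.encode.
Encoder = Callable[[str], list]


def estimate_prompt_tokens(
    messages: List[Dict[str, str]],
    encode: Encoder,
    tokens_per_message: int = 3,  # assumed overhead per message (cookbook value)
    tokens_per_name: int = 1,     # assumed extra cost when a "name" field is present
) -> int:
    """Approximate the prompt tokens for a chat completion request."""
    total = 0
    for message in messages:
        total += tokens_per_message
        for key, value in message.items():
            total += len(encode(value))
            if key == "name":
                total += tokens_per_name
    return total + 3  # assumed priming tokens for the assistant reply


def count_completion_tokens(deltas: List[str], encode: Encoder) -> int:
    """Count tokens in the completion reassembled from streamed content deltas."""
    return len(encode("".join(deltas)))
```

With tiktoken installed, you would call these with `encode = tiktoken.encoding_for_model("gpt-3.5-turbo").encode`. Taking the encoder as a parameter keeps the counting logic testable and lets you swap encodings per model deployment.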

If you’re using the Python SDK, you can make a chat completion stream by passing stream=True to the ChatCompletion.create call, as seen below.

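```python
import openai  # pre-1.0 openai SDK, configured for Azure (api_type, api_base, api_version, api_key)

response = openai.ChatCompletion.create(
    engine=DEPLOYMENT_NAME,  # the name of your AOAI model deployment
    messages=[
        {
            "role": "user",
            "content": "Write me a bedtime story"
        }
    ],
    max_tokens=300,
    stream=True  # return the completion as a series of server-sent events
)
```

The body of the response is a series of server-sent events such as the ones below.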

```text
...

data: {"id":"chatcmpl-8JNDagQPDWjNWOgbUm9u5lRxcmzIw","object":"chat.completion.chunk","created":1699628174,"model":"gpt-35-turbo","choices":[{"index":0,"finish_reason":null,"delta":{"content":"Once"}}],"usage":null}

data: {"id":"chatcmpl-8JNDagQPDWjNWOgbUm9u5lRxcmzIw","object":"chat.completion.chunk","created":1699628174,"model":"gpt-35-turbo","choices":[{"index":0,"finish_reason":null,"delta":{"content":" upon"}}],"usage":null}

data: {"id":"chatcmpl-8JNDagQPDWjNWOgbUm9u5lRxcmzIw","object":"chat.completion.chunk","created":1699628174,"model":"gpt-35-turbo","choices":[{"index":0,"finish_reason":null,"delta":{"content":" a"}}],"usage":null}

...
```
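To log or bill a streamed completion, something has to stitch these chunks back together. Here is a minimal sketch that reassembles the completion text from a complete event-stream body. It assumes each event is a single `data:` line carrying one JSON chunk and that the stream ends with a `data: [DONE]` sentinel, which is how the OpenAI-style streaming API behaves; verify both against the API version you are using. The function names are hypothetical.

```python
import json
from typing import Iterator


def iter_content_deltas(sse_body: str) -> Iterator[str]:
    """Yield the content fragments from a streamed chat completion body."""
    for line in sse_body.splitlines():
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel sent after the last chunk
        chunk = json.loads(payload)
        for choice in chunk.get("choices") or []:
            # Some chunks carry only a role or filter results, so content can be missing.
            content = (choice.get("delta") or {}).get("content")
            if content:
                yield content


def reassemble_completion(sse_body: str) -> str:
    """Join all streamed content fragments into the full completion text."""
    return "".join(iter_content_deltas(sse_body))
```

Fed the three events shown above followed by `data: [DONE]`, `reassemble_completion` returns "Once upon a".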

So how do you deal with this if you are using, or were planning to use, APIM for logging, load balancing, authorization, and throttling? You have a few options.

- You can move logging into the application and use APIM only for load balancing, authorization, and throttling.

- You can insert a proxy logging solution behind APIM to handle logging of both streaming and non-streaming completions and use APIM only for load balancing, authorization, and throttling.

- You can block streaming completions at APIM.

Option 1

Option 1 is workable at a small scale and is a good tactical solution if you need to get something into production quickly. The challenge with this option is enforcing it at scale. If your organization has amazing governance and an excellent SDLC, you may be able to enforce it. In my experience, few organizations have that level of maturity. The other problem is that, ideally, logging for compliance purposes should be implemented and enforced by a separate entity to ensure separation of duties.

Benefits

- Quick and easy to put in place.

Considerations

- Difficult to enforce at scale.

- Puts the developers in charge of enforcing logging on themselves. Could be an issue with separation of duties.
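The trick with logging inside the application is to avoid undoing the benefit of streaming. Instead of buffering the whole completion, pass each fragment to the user as it arrives and write the log record when the stream ends. The sketch below is an illustration under stated assumptions, not a prescribed implementation: it assumes `pieces` is an iterable of content fragments already extracted from the stream, and `log` is a hypothetical callback that ships the record to whatever logging sink you use.

```python
from typing import Callable, Dict, Iterable, Iterator


def stream_with_logging(
    pieces: Iterable[str],
    log: Callable[[Dict[str, object]], None],
) -> Iterator[str]:
    """Pass streamed content through unchanged and log it once the stream ends."""
    parts = []
    completed = False
    try:
        for piece in pieces:
            parts.append(piece)
            yield piece  # forward immediately so the user still sees streaming output
        completed = True
    finally:
        # Runs on normal exhaustion and when the caller stops early (generator close).
        log({"completion": "".join(parts), "chunks": len(parts), "completed": completed})
```

The `try`/`finally` means a user who abandons the response midway still produces a record (flagged `completed: False`), which matters for chargebacks because the model may already have generated those tokens.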

Option 2

Option 2 is an interesting solution that my peer Shaun Callighan came up with. In Shaun’s architecture, a proxy-type solution is placed between APIM and AOAI, and that solution handles parsing the requests and responses, calculating token usage, and logging the information to an Event Hub. Shaun has even been kind enough to provide a sample solution demonstrating how this could be done with an Azure Function.

Benefits

- Allows you to continue using APIM for the benefits around load balancing, authorization, and throttling.

- Supports streaming chat completions.

- Provides the logging necessary for compliance and chargebacks for both streaming and non-streaming chat completions.

- Centralized enforcement of logging.

Considerations

- You will need to develop your own code to parse the requests/responses, calculate token usage for chargebacks, and deliver the logs to Event Hub. (You could use Shaun’s code as a starting point.)

- You’ll need to ensure this proxy does not become a bottleneck. It will need to scale as requests to the AOAI instance scale, along with APIM and whatever else sits in the path of the user’s request.
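One detail any proxy in this position has to get right is that network reads do not line up with event boundaries: a single read can end halfway through a `data:` line. The sketch below is not Shaun's code; it is a minimal illustration of the idea, forwarding each raw chunk untouched and parsing only complete lines on the side. The names are hypothetical, and `on_complete` stands in for the step that would send the record to Event Hub, which this sketch does not implement.

```python
import json
from typing import Callable, Iterable, Iterator


def tap_sse_stream(
    chunks: Iterable[bytes],
    on_complete: Callable[[str], None],
) -> Iterator[bytes]:
    """Forward raw SSE bytes unchanged while extracting the completion text."""
    buffer = b""
    content = []
    for chunk in chunks:
        yield chunk  # forward first so the proxy adds as little latency as possible
        buffer += chunk
        # Network chunks do not align with SSE events, so only parse complete lines.
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            line = line.strip()
            if not line.startswith(b"data:"):
                continue
            payload = line[len(b"data:"):].strip()
            if payload == b"[DONE]":
                continue  # end-of-stream sentinel, nothing to parse
            event = json.loads(payload)
            for choice in event.get("choices") or []:
                piece = (choice.get("delta") or {}).get("content")
                if piece:
                    content.append(piece)
    on_complete("".join(content))  # only reached if the caller consumes the whole stream
```

Because the chunk is yielded before any parsing happens, the client sees the same bytes at nearly the same time as it would without the proxy; the parsing cost is what you would need to account for when sizing the proxy to avoid it becoming a bottleneck.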

Option 3

Option 3 is another valid option (and honestly a simple fix, IMO) and may be where some customers end up in the near term. With this option, you block the use of streaming completions at APIM with a custom policy snippet like the one shown later in this post. If the developers are worried about the user experience, they can always display a “processing”-style message in the text window while the model generates the completion.

Benefits

- Allows you to continue using APIM for logging, load balancing, throttling, and authorization.

- No new code introduced.

- Centralized enforcement of logging.

- No additional bottlenecks.

Considerations

- Your developers may hate you for this.

- There may be a legitimate use case where streaming chat completions are required.

Since Shaun has a proof-of-concept example for option 2, I figured I’d showcase a sample APIM policy snippet for option 3. In the APIM policy snippet below, I determine whether the stream property is included in the request body and store its value in a variable (it will be true or false). I then check the variable, and if the value is true, I return a 404 status code with the message that streaming chat completions are not allowed.

```xml
<!-- Capture the value of the stream property if it is included -->
<choose>
    <when condition="@(context.Request.Body.As<JObject>(true)["stream"] != null && context.Request.Body.As<JObject>(true)["stream"].Type != JTokenType.Null)">
        <set-variable name="isStream" value="@{
            var content = (context.Request.Body?.As<JObject>(true));
            string streamValue = content["stream"].ToString();
            return streamValue;
        }" />
    </when>
</choose>
<!-- Block streaming completions and return a 404; the case-insensitive comparison handles the boolean being rendered as "True" -->
<choose>
    <when condition="@(context.Variables.GetValueOrDefault<string>("isStream","false").Equals("true", StringComparison.OrdinalIgnoreCase))">
        <return-response>
            <set-status code="404" reason="BlockStreaming" />
            <set-header name="Microsoft-Azure-Api-Management-Correlation-Id" exists-action="override">
                <value>@{return Guid.NewGuid().ToString();}</value>
            </set-header>
            <set-body>Streaming chat completions are not allowed by this organization.</set-body>
        </return-response>
    </when>
</choose>
```

If you ignore streaming chat completions and keep using a logging policy like the custom policies I mentioned earlier, the model will still generate the completion, but APIM will return a 500 status code to the developer. A streaming response doesn’t have the structure of a non-streaming response, so the policy’s logic can’t parse it. This means you’ll be throwing money out the window (you still pay for the tokens the model generated) and potentially struggling to troubleshoot the root cause. TL;DR: if you’re using APIM for logging today or plan to, pick one of the options above for dealing with streaming and get it in place.
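To make the failure mode concrete: a logging policy that reads token usage typically parses the response body as one JSON document and reads its usage block. APIM policy expressions are C#, so the Python sketch below only illustrates the same problem in spirit, using hypothetical, trimmed-down response bodies. The non-streaming body parses fine, while the streaming body begins with `data:` and is not valid JSON at all, so the parse throws, which is the kind of unhandled error that surfaces from APIM as a 500.

```python
import json

# Hypothetical, trimmed-down response bodies for illustration only.
NON_STREAMING_BODY = (
    '{"object":"chat.completion","choices":[{"index":0,"message":'
    '{"role":"assistant","content":"Once upon a"}}],'
    '"usage":{"prompt_tokens":12,"completion_tokens":3,"total_tokens":15}}'
)
STREAMING_BODY = (
    'data: {"object":"chat.completion.chunk","choices":[{"index":0,'
    '"delta":{"content":"Once"}}],"usage":null}\n\n'
    "data: [DONE]\n\n"
)


def read_total_tokens(body: str) -> int:
    """Mimic a logging policy that expects one JSON document with a usage block."""
    return json.loads(body)["usage"]["total_tokens"]


print(read_total_tokens(NON_STREAMING_BODY))  # 15
try:
    read_total_tokens(STREAMING_BODY)
except json.JSONDecodeError as err:
    # The event-stream body starts with "data:", which is not valid JSON.
    print("cannot parse streaming body:", err.msg)
```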

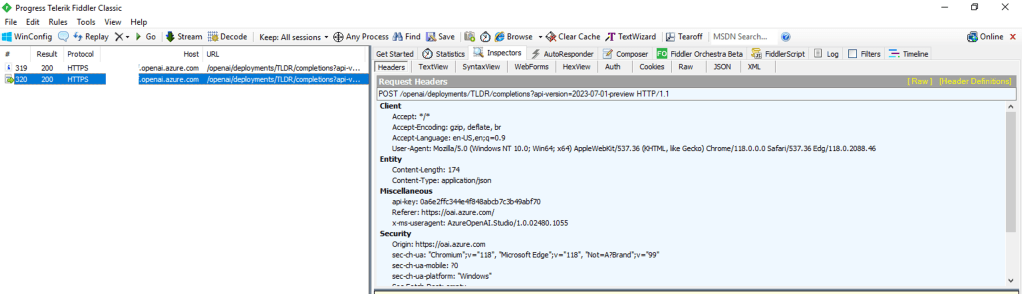

Last but not least, I want to link to a wonderful policy snippet by Shaun Callighan. This policy snippet dumps the trace logs from APIM into the headers returned in the response from APIM. This is incredibly helpful when troubleshooting a 500 status code returned by APIM.

Well folks, that wraps up this short blog post on this Friday afternoon. Have a great weekend and happy holidays!